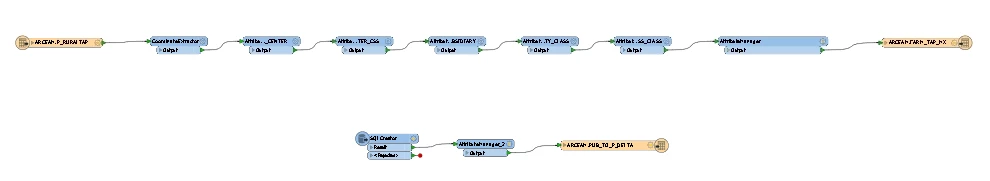

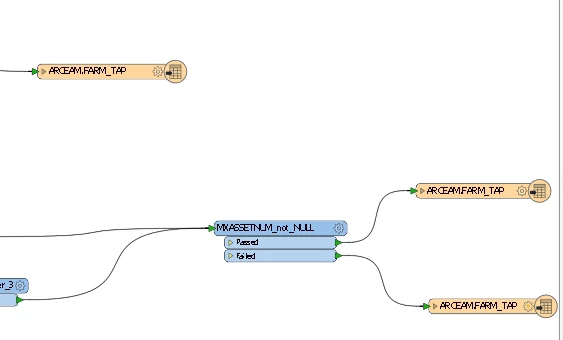

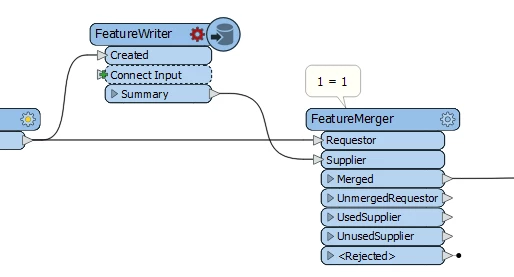

I have two separate flows for a process, as shown in the screenshots above (upper and lower). The two flows are independent, but the lower workflow must be executed only after the upper workflow has finished. To handle this, I have created a separate workbench for each flow, and I call the second workbench after the upper one has run. Is there any way I can merge these two workflows into a single workbench and still control which workflow executes first?