Calling all FME Community Members!

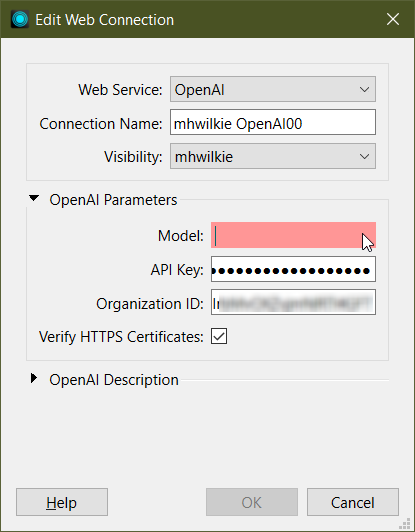

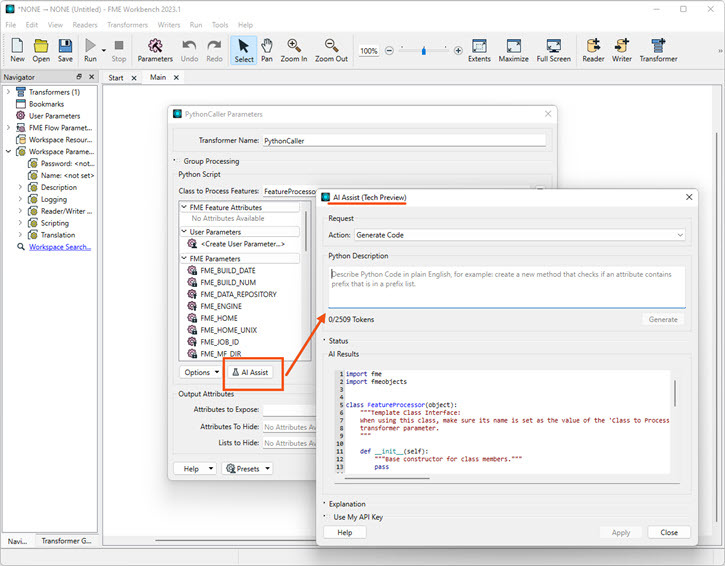

With the official release of FME 2023.1 comes a brand new AI-powered tool: AI Assist. This new AI feature can be found as a component of our Regex, SQL, and Python editors, which accompany a variety of our transformers and formats. AI Assist is ready and available to help you generate code for your queries or custom functionality in FME.

We encourage you to make use of this Megathread to provide your feedback and discuss your experiences with FME's new AI Assist. Let us and your fellow FME Community members know how your testing goes, including your use case, any struggles or aha moments, and of course your tips for success.

You can also give your fellow Community members a heads-up about any bugs you discover. Please also report these bugs directly to Safe Software Technical Support by creating a support case.

Check out our on-demand FME 2023.1 release webinar for a demo of AI Assist, and our AI Assist FAQ page for more details on this capability!

Just keep in mind that AI Assist is in Tech Preview status and should not be considered production-ready at this time.

We look forward to hearing about your experiences with FME's new AI Assist!

Happy testing!