Hello FME Community,

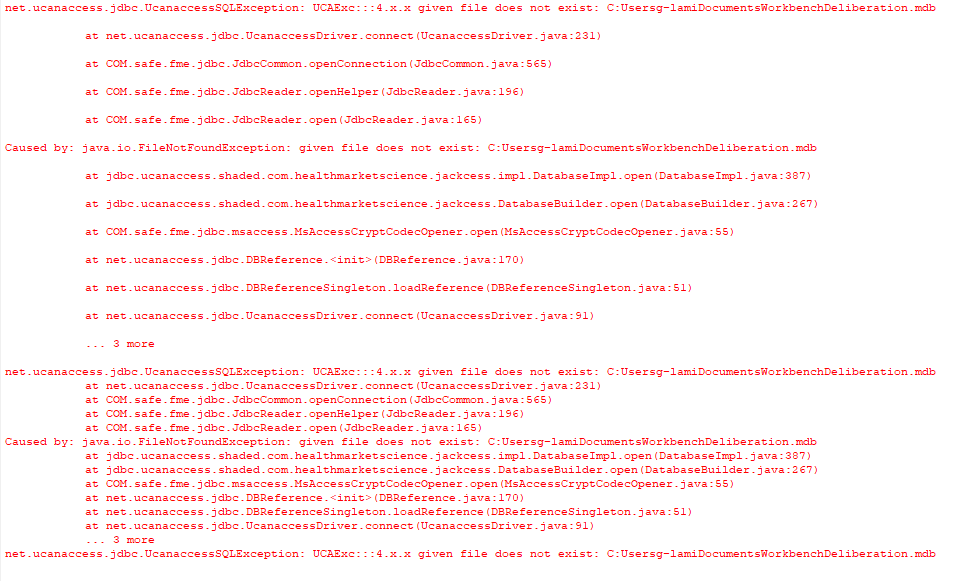

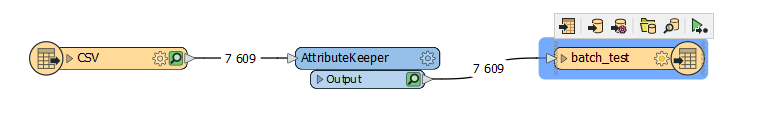

I have been working with batch files and all goes well when my reader and writter are in posgtresql but when my reader is in access(.mdb) file or excel it causes problems,it says that it cannt find the file where the file already exist and the strange things is that it worked once. When i created a new batch with same workflow and same file i get the following error. I seems a problem of url as the \\ are removed but i dont undarstand the cause of it.

I'm attaching the .tcl files for the the workbench that works and doesnt work( they are exactly the same apart the name). The file that ends with 22 doesnt work and it has less code than the other one.

I'm attaching the .tcl files for the the workbench that works and doesnt work( they are exactly the same apart the name). The file that ends with 22 doesnt work and it has less code than the other one.

Thanks in advance for your help.

and i get this error :

and i get this error :