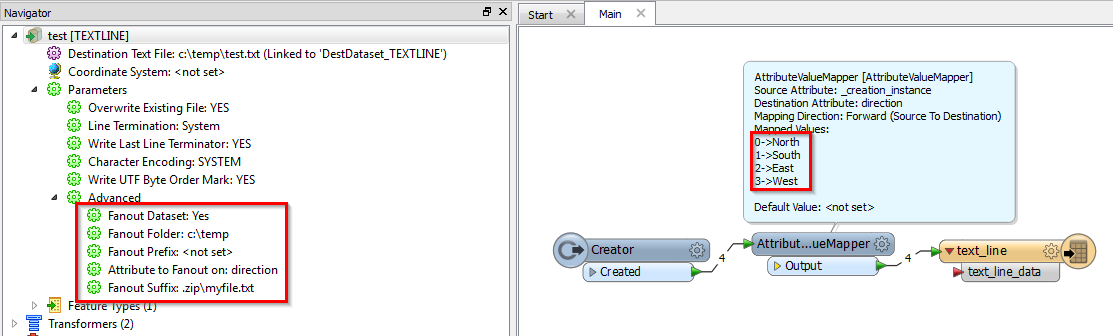

I'm trying to fan out a GML file into multiple ZIP files. The GML file inside each ZIP should always have the same name, while the ZIP file name comes from an attribute value. So I want:

- North.zip, containing test.gml
- South.zip, containing test.gml
- East.zip, containing test.gml
- West.zip, containing test.gml

Dataset fanout doesn't seem to allow this. The only result I can get is a single ZIP containing many differently named GML files.
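A possible fallback outside FME, offered only as a sketch: assume the GML can first be written to one folder per attribute value (for example `out\North\test.gml`, `out\South\test.gml`; this layout is hypothetical, not a confirmed FME option). A short post-processing step using Python's standard `zipfile` module could then package each folder as `<value>.zip`. The function name `zip_per_region` and the folder layout are illustrative assumptions.

```python
import zipfile
from pathlib import Path


def zip_per_region(fanout_root: Path, out_dir: Path, member_name: str = "test.gml") -> list[Path]:
    """Package each <fanout_root>/<Value>/ folder as <out_dir>/<Value>.zip.

    Hypothetical layout (an assumption, not an FME default): one sub-folder
    per attribute value, each holding a GML file called member_name.
    """
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for region_dir in sorted(p for p in fanout_root.iterdir() if p.is_dir()):
        if not (region_dir / member_name).is_file():
            continue  # skip folders that lack the expected GML file
        archive = out_dir / f"{region_dir.name}.zip"
        with zipfile.ZipFile(archive, "w", compression=zipfile.ZIP_DEFLATED) as zf:
            # Add every file in the folder (e.g. a schema sitting next to the GML),
            # keeping the bare file name so only the ZIP name varies per value.
            for f in sorted(region_dir.iterdir()):
                if f.is_file():
                    zf.write(f, arcname=f.name)
        written.append(archive)
    return written
```

Calling `zip_per_region(Path("out"), Path("zips"))` would yield `zips/North.zip`, `zips/South.zip` and so on, each containing `test.gml`. Doing this inside FME itself would still be preferable if the writer supports it.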

Does anyone know a way around this?

Thanks in advance, Dave