Hi,

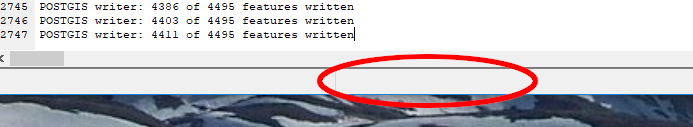

My problem concerns a process that updates a PostGIS table from another geographic database. Sometimes the import stops making progress near the end, as you can see:

POSTGIS writer: 6589 of 6765 features written

POSTGIS writer: 6601 of 6765 features written

POSTGIS writer: 6606 of 6765 features written

POSTGIS writer: 6628 of 6765 features written

POSTGIS writer: 6649 of 6765 features written

And then nothing more is logged for hours.

It doesn't seem to come from the data, because when I run the process in small batches it sometimes works. The slowdown seems unpredictable, but it always occurs near the end of the process. And yes, the computer is still running and doesn't seem to be saturated: processor 8%, memory 21%.

Does anyone have an idea of what I could try in order to understand what is happening?

Thanks