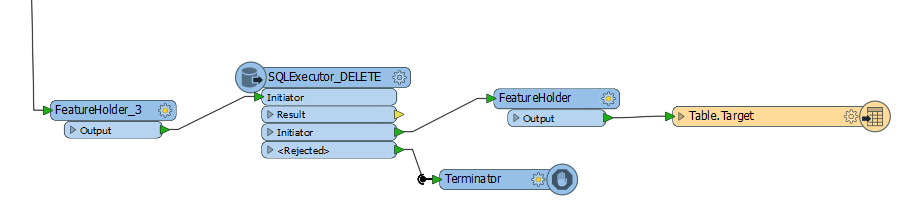

Hi! I am writing an FME script to insert records into target table. Before inserting a record, I want to delete the record has the same ID in database, if it exists. I made an FME workspace as screenshot to do run a DELETE T-SQL command for each feature, such that

- all deletions are performed before any insertion.

- the translation will terminate if any of the deletion fails.

However, I considered the following hypothetical scenarios (in practice I never seen any of them happened, but still possible).

- The 100th feature fails in the SQLExecutor, such that the translation ends with 99 records deleted from database.

- All feature passes SQLExecutor, but the first feature failed in the writer, such that the translation ends with all deletions done, but not insertion.

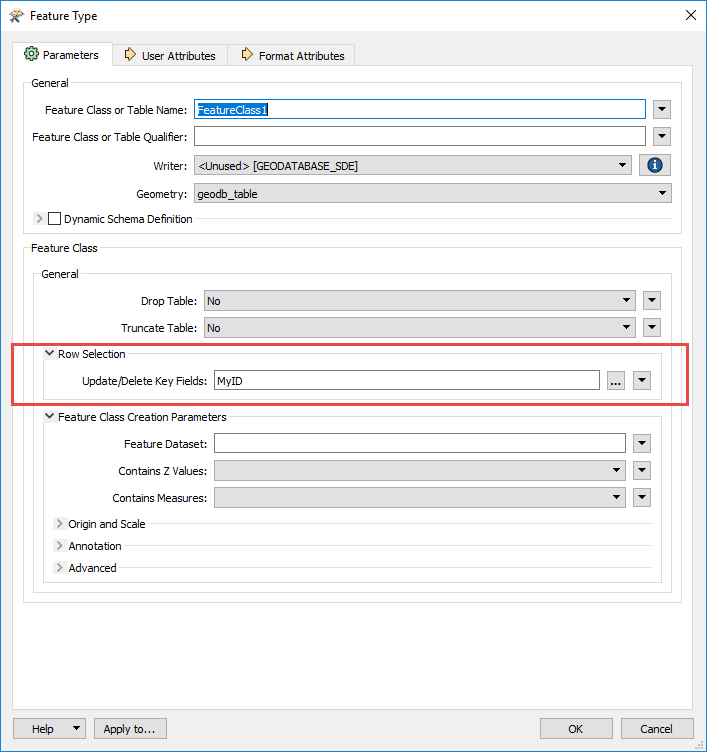

Considering the importance of data integrity. Is it possible for me to change the workspace, such that:

- If any feature fails in SQLExecutor transformer, then rollback all the changes made by the same SQLExecutor?

- If any feature fails in writer, then rollback all the deletions that has been done?

- If any feature fails in writer, then rollback all the features inserted in the same translation before?

Improving any of these will help. Thank you!

More details: working with SQL Server with Non-versioned SDE database.