Hi All,

I have a workbench built in FME Desktop 2019.1 which I am planning to deploy to FME Server 2019.1. This workbench applies incremental updates to records in a SQL Server database based on updates delivered in XML payloads. It consists of an XML reader, various transformers that convert the XML data into the required format, and a SQLExecutor that runs an 'upsert' in SQL Server (we elected not to use a SQL Server Writer). XML payloads are delivered to a directory on a file share (probably FTP, maybe SMB; this is an implementation detail, and I can map the directory as a network drive if required). We do not need to handle any geospatial data.

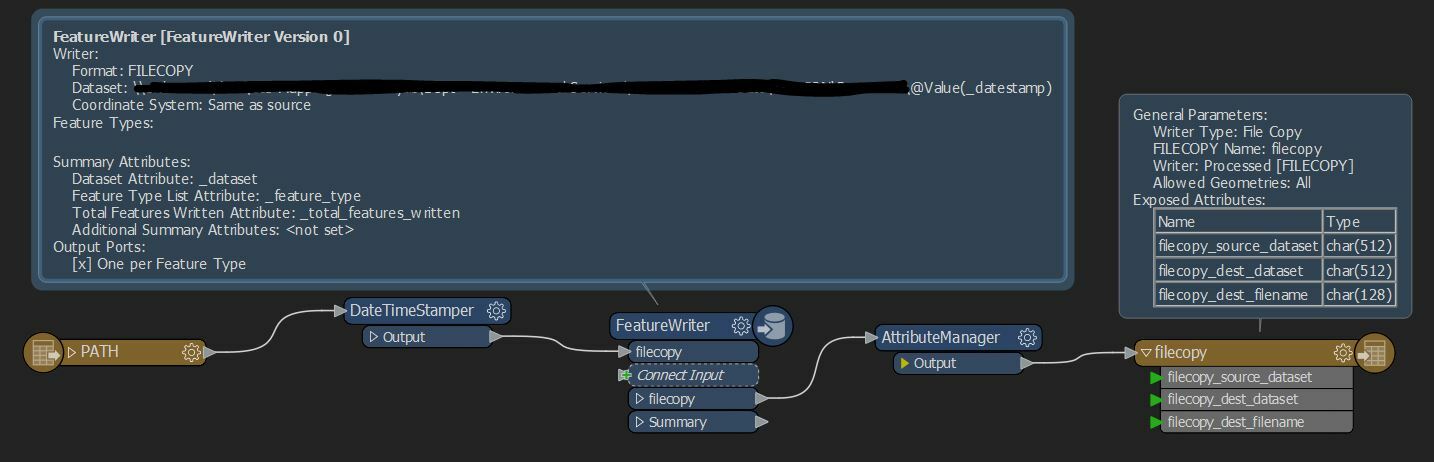

What I am trying to do is determine a way to trigger the workbench in FME Server by a file being dropped into a directory, and then, once the workbench has run, move the file to another archive directory. I can see that triggering an Automation in FME Server on the basis of a change in a directory is not overly complicated, but the action of moving the file when finished is not clear to me.

I am very open to suggestions on this, as I have not yet been able to find a way to do something like this. I am not locked into this particular read-then-archive workflow either; all I need is to make sure FME Server will not read the same files in the directory again after it has successfully processed them once. The workbench is not set in stone either, so I can make changes there if necessary.

Thanks in advance!

This moves the file out of the Input folder into the new date folder in Processed, leaving the Input folder ready for the next file to be dropped in.
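For anyone who would rather script that move step (for example from a Python shutdown script in the workspace, or from a separate script run after the job), here is a minimal sketch. The function name `archive_file`, the `YYYY-MM-DD` folder naming, and the example paths are my own assumptions for illustration, not anything taken from the workbench above.

```python
import shutil
from datetime import date
from pathlib import Path


def archive_file(src, processed_root, today=None):
    """Move src into processed_root/<YYYY-MM-DD>/ and return the new path.

    Hypothetical helper: the dated-folder layout is an assumption.
    """
    src = Path(src)
    day = today or date.today()

    # Create the date folder under Processed if it does not exist yet.
    target_dir = Path(processed_root) / day.isoformat()
    target_dir.mkdir(parents=True, exist_ok=True)

    # Refuse to overwrite a file that was already archived under the same name.
    target = target_dir / src.name
    if target.exists():
        raise FileExistsError(f"{target} already exists")

    shutil.move(str(src), str(target))
    return target
```

Calling something like `archive_file(r"\\share\Input\payload.xml", r"\\share\Processed")` (paths are placeholders) only after the workbench has finished successfully means a failed run leaves the file in Input, where it can be picked up again.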
