Hello everyone

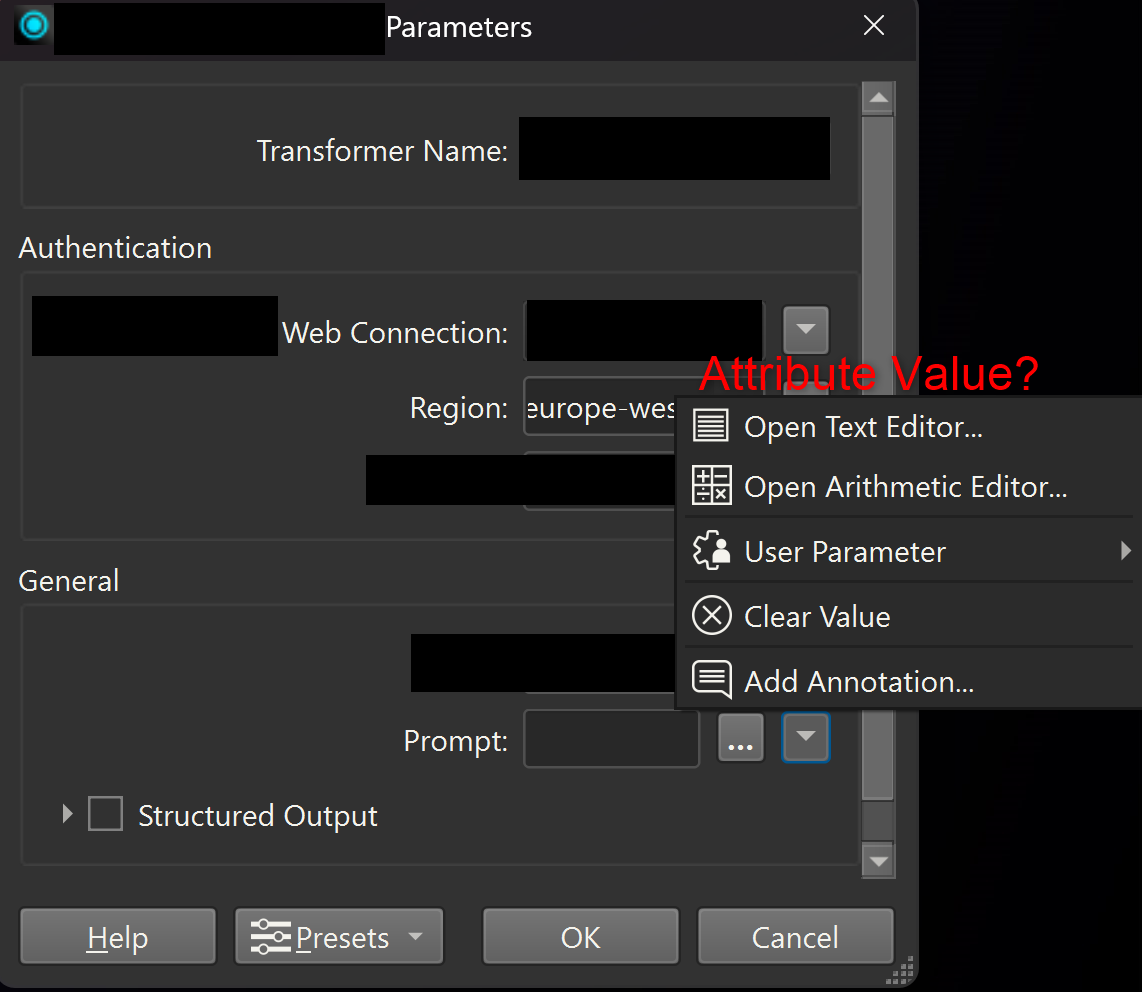

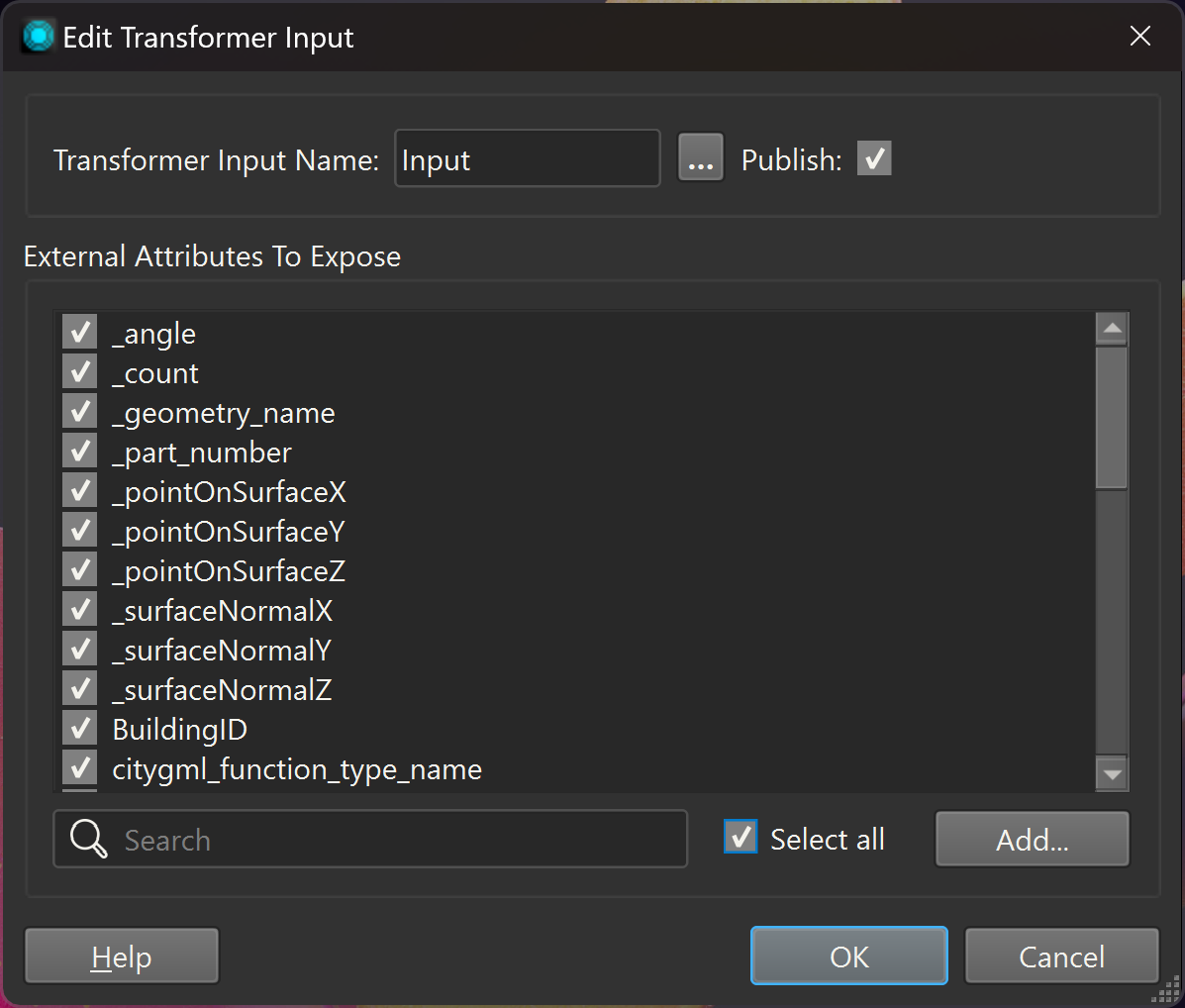

I've been designing a new custom transformer to bundle part of my workflow (in FME Form 2025.0). The issue is that I can't add any of my attributes in the text editor, even though all attributes are selected to be exposed in the input parameters.

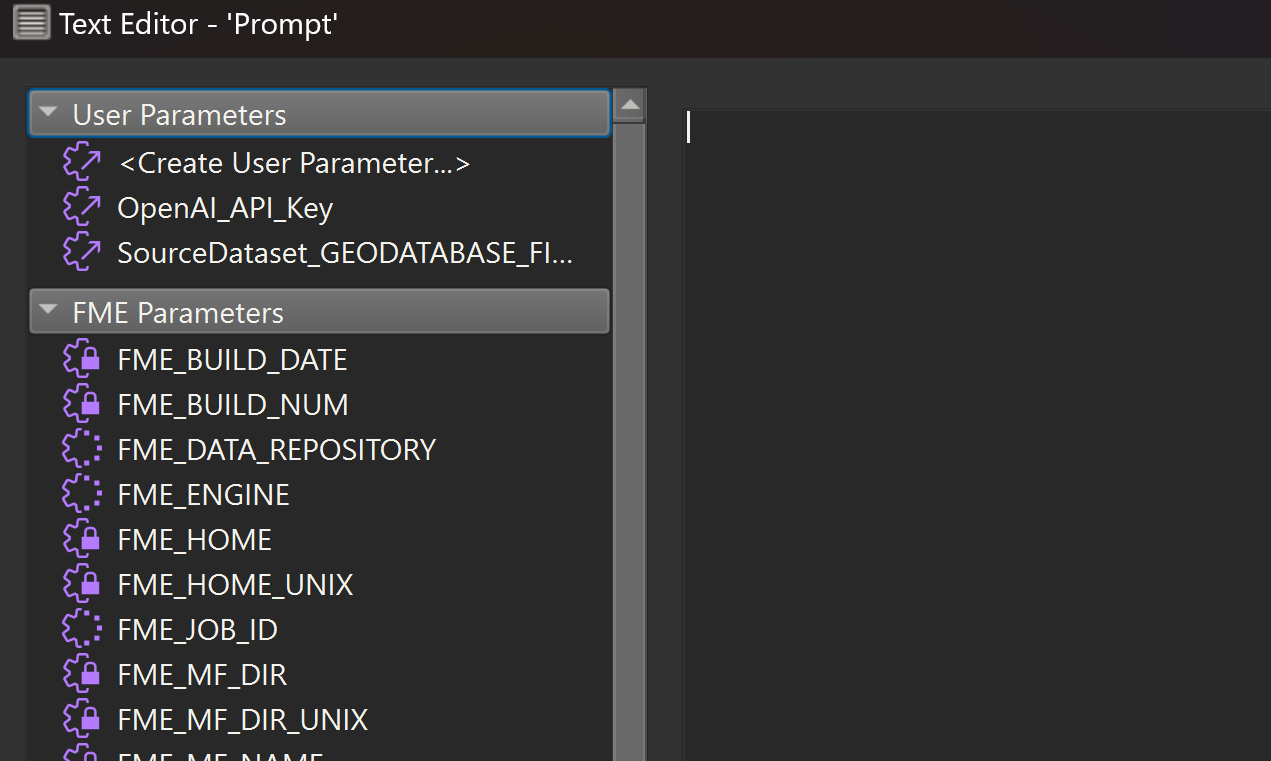

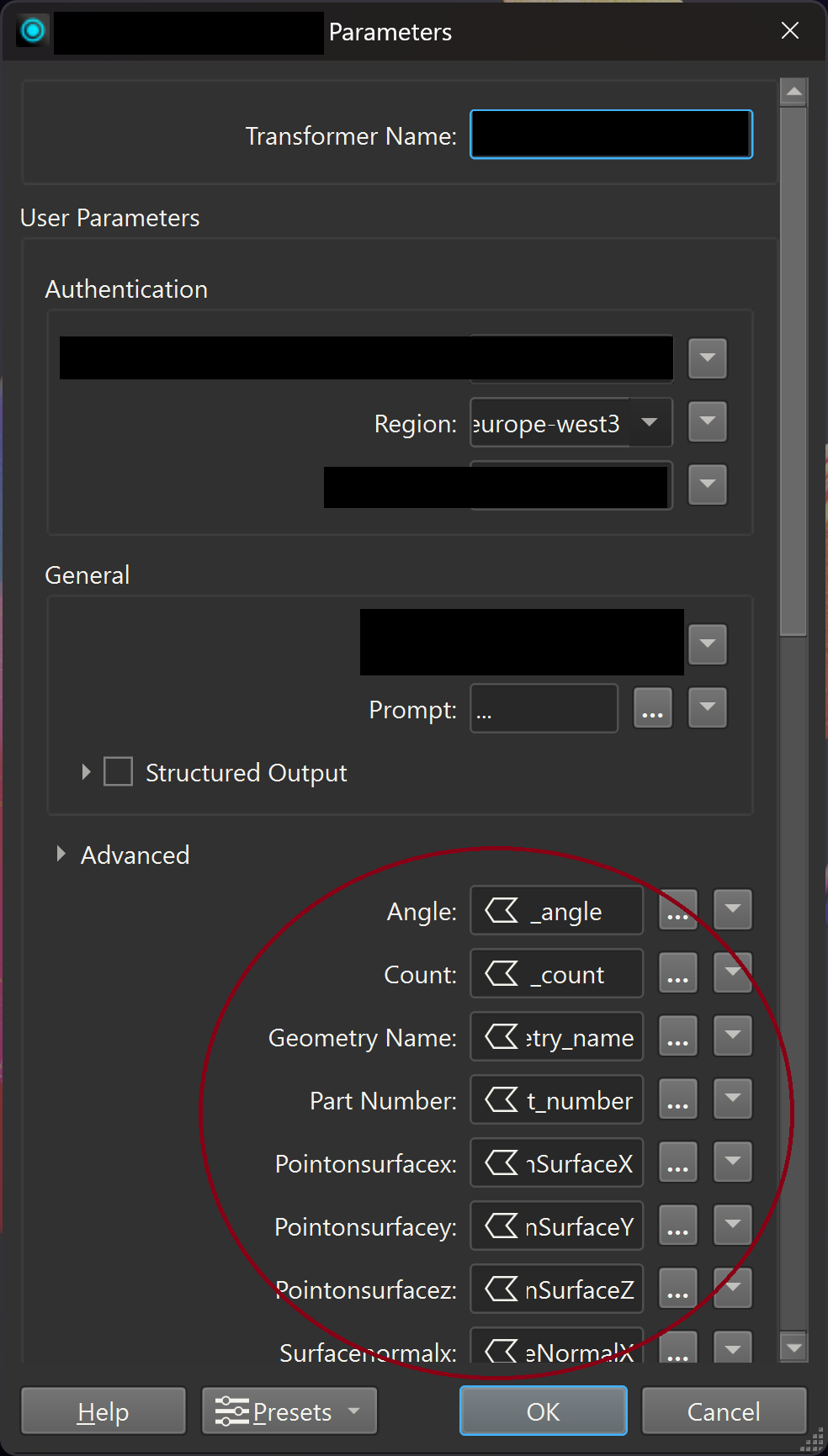

Moreover, if I edit the custom transformer on its own, no attributes appear (as expected). But if I edit it inside a Workbench session and choose specific attributes to expose, they are still not available in the text editor; instead, they get automatically added as user parameters for the custom transformer. Can anyone explain to me how this works? I can't find a clear answer in https://docs.safe.com/fme/2025.0/html/FME-Form-Documentation/FME-Form/Workbench/Using_Custom_Transformers.htm#Editing_Input_Output_Ports

It also bothers me that this is essentially a copy of another custom transformer with a couple of changes (only adding transformers or changing their parameters, nothing involving the inputs), and that other transformer does let me add feature attribute values in any prompt.