I am getting some strange spiky artefacts from the ContourGenerator. The dataset is a government-issued DTM, and the images show some islands at sea. The data was preprocessed with raster cell rounding and a NoData setter to ensure that the sea surface did not hold any data.
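To show what the two preprocessing steps do to the cell values, here is a minimal plain-Python sketch. It is not FME code, and it rests on several assumptions: the DTM is held as a list of rows of floats, rounding happens before the NoData step, the sea surface rounds to a single constant value, and rounding is to a fixed number of decimals. The function name `preprocess` and its `sea_value` and `decimals` parameters are hypothetical.

```python
import math
from typing import List, Optional

Grid = List[List[Optional[float]]]


def preprocess(grid: List[List[float]], sea_value: float, decimals: int = 2) -> Grid:
    """Round every cell, then mark cells equal to the sea value as NoData (None).

    Hypothetical helper: rounding first means small noise on the sea surface
    (e.g. -0.1512 or -0.149) collapses onto sea_value before the NoData step.
    """
    out: Grid = []
    for row in grid:
        new_row: List[Optional[float]] = []
        for value in row:
            rounded = round(value, decimals)
            if math.isclose(rounded, sea_value, abs_tol=1e-9):
                new_row.append(None)  # sea surface -> NoData
            else:
                new_row.append(rounded)  # land keeps its rounded elevation
        out.append(new_row)
    return out


dtm = [[-0.1512, -0.149, 1.234], [-0.15, 2.5678, 3.0]]
print(preprocess(dtm, sea_value=-0.15))
```

A side effect of this ordering is that any land cell that happens to round to the sea value is also turned into NoData.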

Settings as follows:

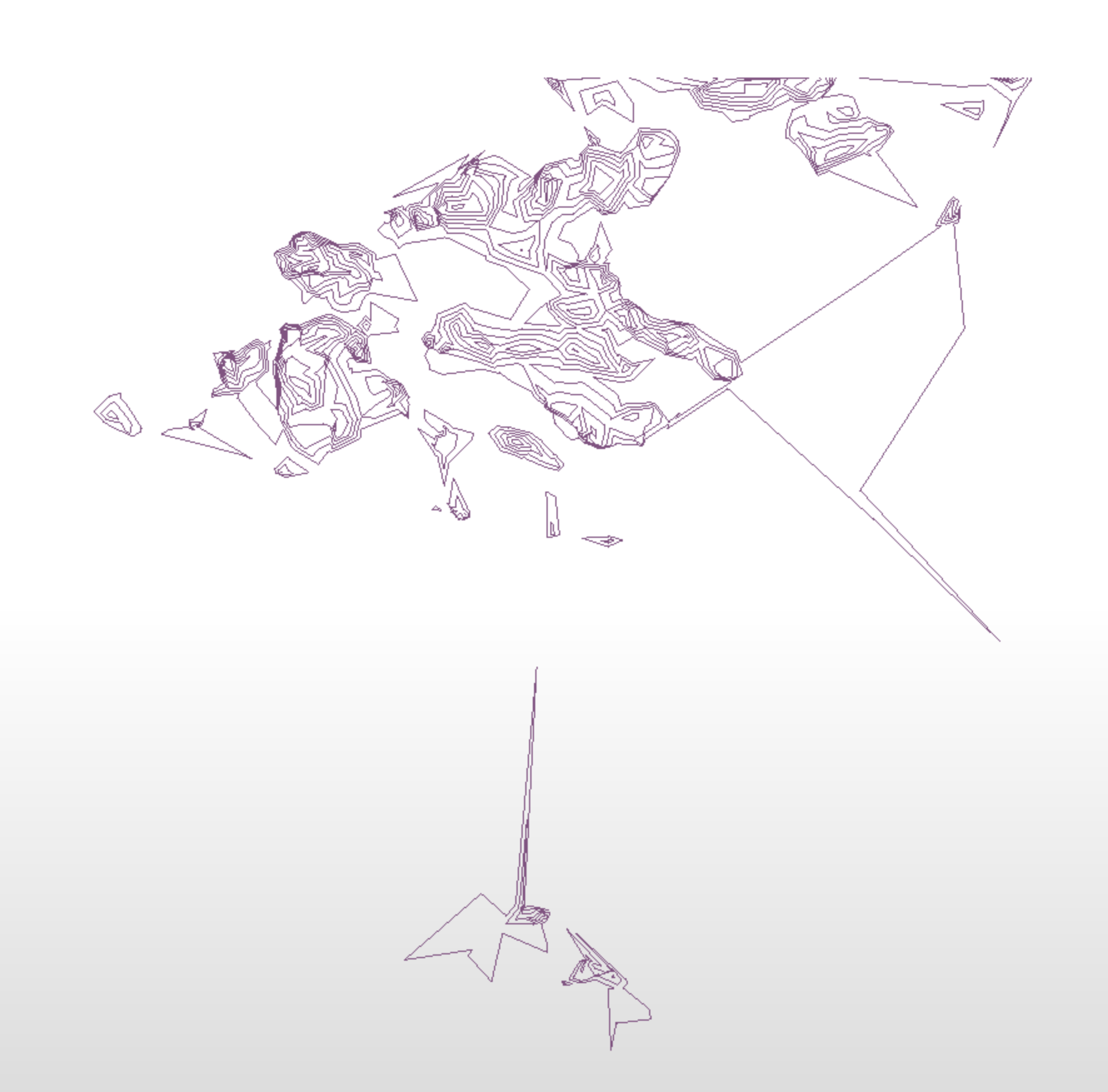

First image: elev 2.5, tolerance 5

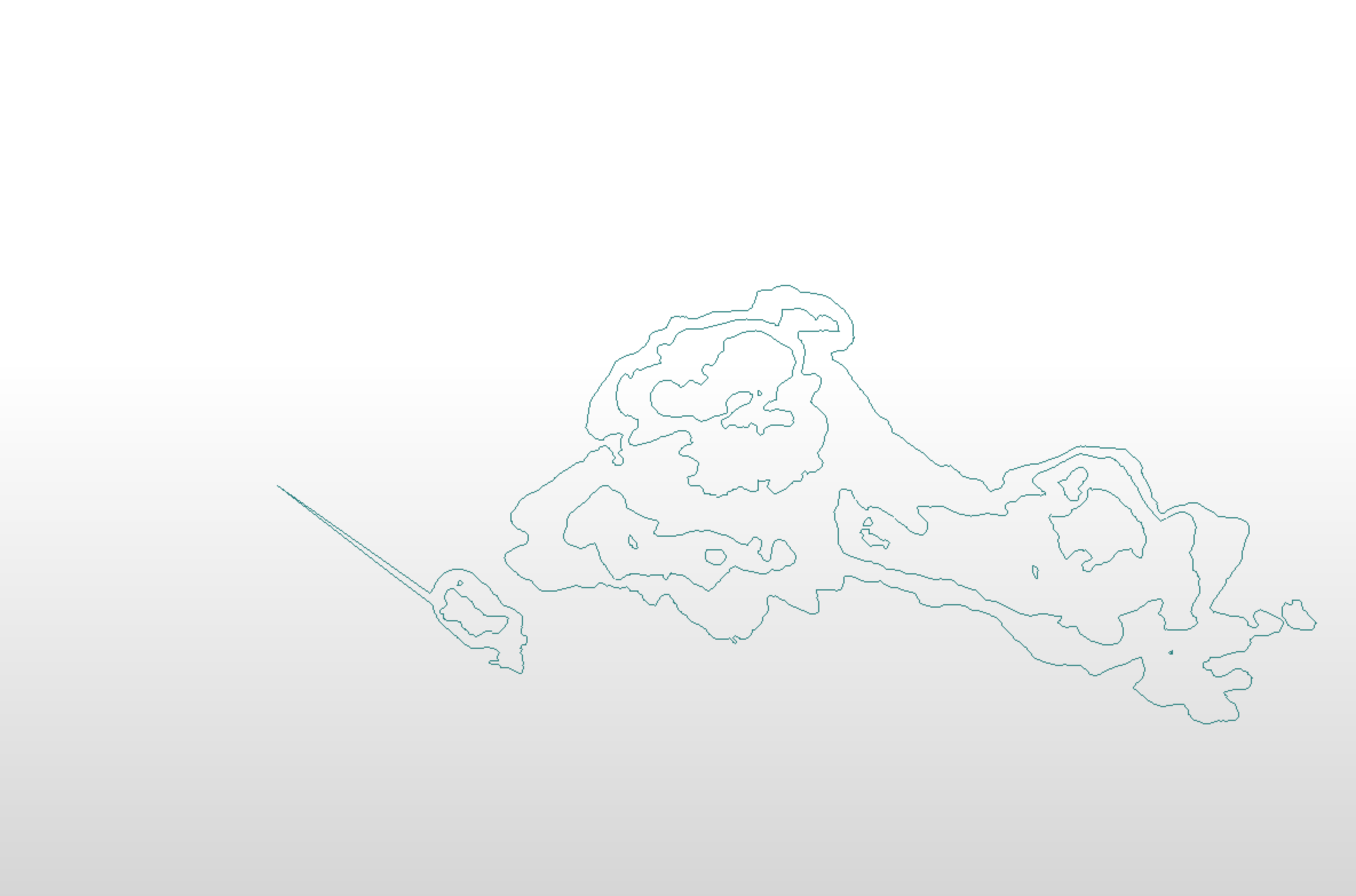

Second image: elev 2.5, tolerance 0

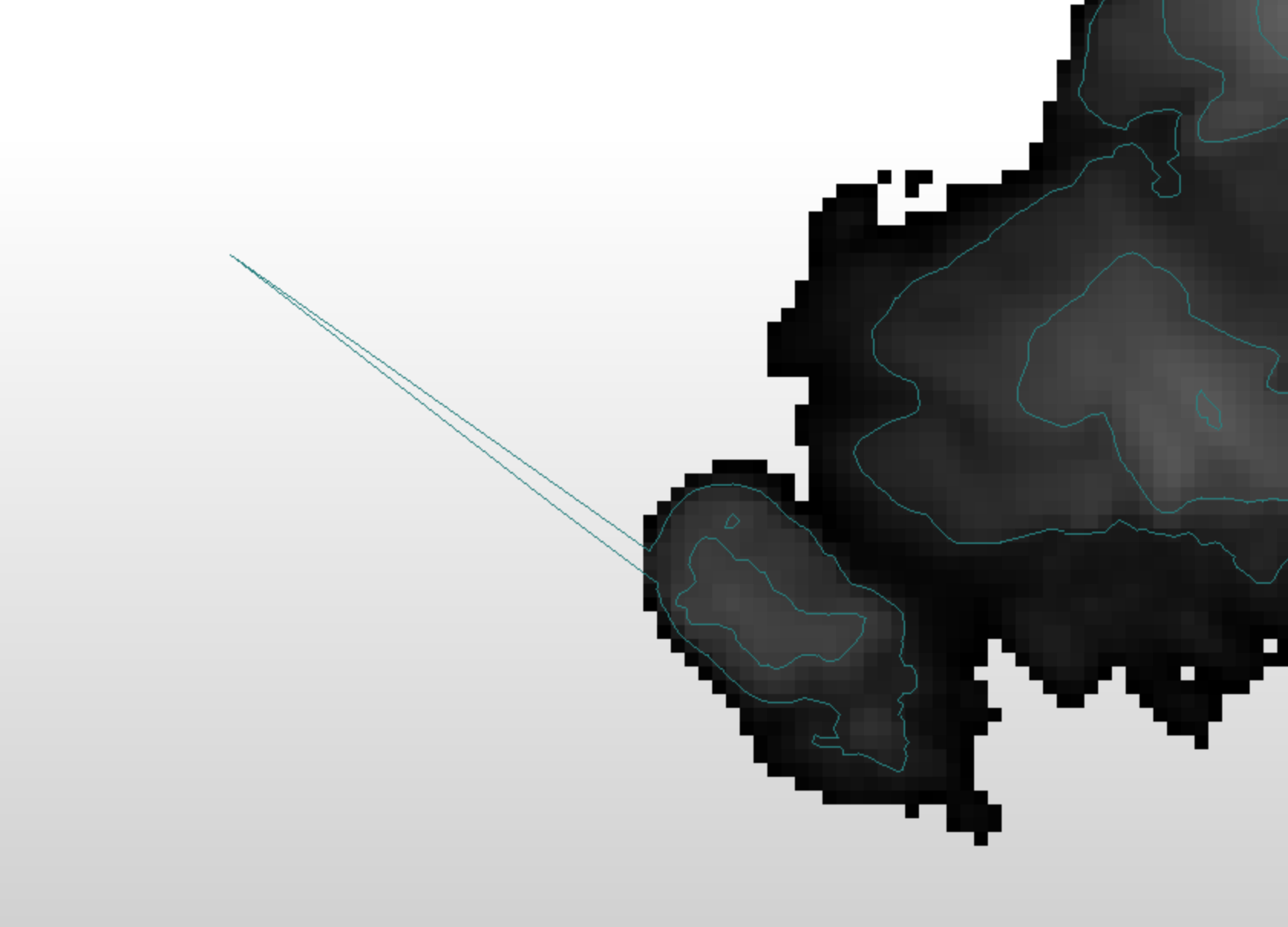

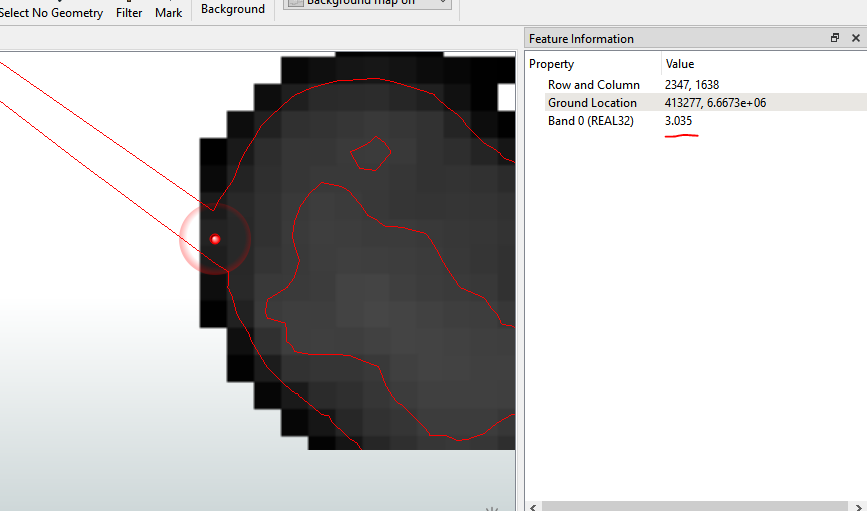

The third image is a close-up of the second (elev 2.5, tolerance 0).

Any ideas on how to deal with this?

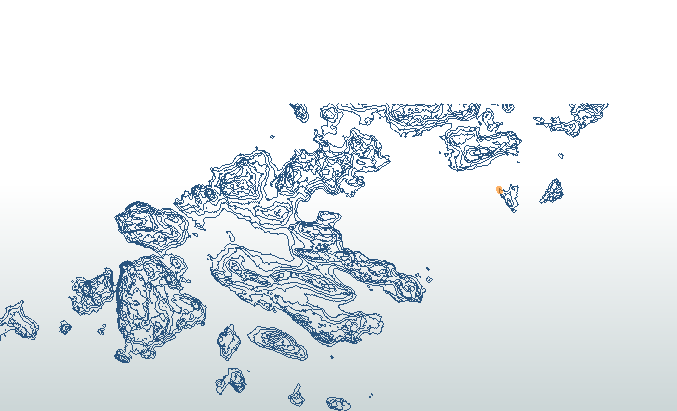

One thing I played around with was to first set NoData to -0.15 (the value the sea has in the data), then use the RasterExtentsCoercer (set to Data Extents) to extract polygons, buffer them by 5 meters, dissolve them to remove overlaps, and clip the input raster into 70 or so parts. Use a counter to add an id to each raster part, then run the parts through the ContourGenerator, grouping by the id. The whole process is still considerably faster than running the whole image through the ContourGenerator (15 seconds vs 50 seconds), and the result is pretty similar. A further benefit of this approach is that it keeps the 0 meter contour.
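The partitioning part of this workflow (data extents, buffer, dissolve, clip, number the parts) can be sketched in plain Python. This is a minimal illustration of the idea under stated assumptions, not FME code: the raster is a list of rows with `None` for NoData, islands are 8-connected groups of data cells, and the 5 m buffer is expressed as a hypothetical `radius` in cells (for example 5 for 1 m cells) using a square buffer. The names `data_islands`, `buffer_cells`, `partition` and `clip` are hypothetical. Each numbered part would then be contoured on its own, which is what grouping the ContourGenerator by the id achieves.

```python
from collections import deque
from typing import Dict, List, Optional, Set, Tuple

Cell = Tuple[int, int]
Grid = List[List[Optional[float]]]

# 8-connectivity: diagonal neighbours belong to the same island
NEIGHBOURS = [(dr, dc) for dr in (-1, 0, 1) for dc in (-1, 0, 1) if (dr, dc) != (0, 0)]


def data_islands(grid: Grid) -> List[Set[Cell]]:
    """Group non-NoData cells into connected islands (the 'data extents')."""
    rows, cols = len(grid), len(grid[0])
    seen: Set[Cell] = set()
    islands: List[Set[Cell]] = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] is None or (r, c) in seen:
                continue
            island: Set[Cell] = set()
            queue = deque([(r, c)])
            seen.add((r, c))
            while queue:  # breadth-first flood fill over data cells
                cr, cc = queue.popleft()
                island.add((cr, cc))
                for dr, dc in NEIGHBOURS:
                    nr, nc = cr + dr, cc + dc
                    if (0 <= nr < rows and 0 <= nc < cols
                            and grid[nr][nc] is not None and (nr, nc) not in seen):
                        seen.add((nr, nc))
                        queue.append((nr, nc))
            islands.append(island)
    return islands


def buffer_cells(cells: Set[Cell], radius: int, rows: int, cols: int) -> Set[Cell]:
    """Square buffer of `radius` cells around an island, clipped to the grid."""
    out: Set[Cell] = set()
    for r, c in cells:
        for nr in range(max(0, r - radius), min(rows, r + radius + 1)):
            for nc in range(max(0, c - radius), min(cols, c + radius + 1)):
                out.add((nr, nc))
    return out


def partition(grid: Grid, radius: int) -> Dict[int, Set[Cell]]:
    """Buffer each island, dissolve overlapping buffers, and number the parts from 1."""
    rows, cols = len(grid), len(grid[0])
    parts = [buffer_cells(i, radius, rows, cols) for i in data_islands(grid)]
    merged = True
    while merged:  # keep merging until no two parts share a cell
        merged = False
        for i in range(len(parts)):
            for j in range(i + 1, len(parts)):
                if parts[i] & parts[j]:
                    parts[i] |= parts.pop(j)
                    merged = True
                    break
            if merged:
                break
    return {part_id: cells for part_id, cells in enumerate(parts, start=1)}


def clip(grid: Grid, cells: Set[Cell]) -> Grid:
    """Keep only the cells inside one part; everything else becomes NoData."""
    return [[grid[r][c] if (r, c) in cells else None for c in range(len(grid[0]))]
            for r in range(len(grid))]
```

With two single-cell islands six columns apart, a radius of 1 keeps them as two parts, while a radius of 3 makes the buffers overlap so they dissolve into one part.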
