Hi all,

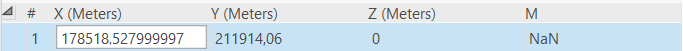

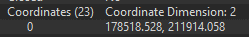

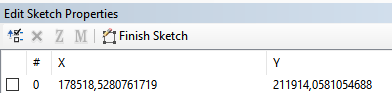

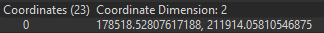

I noticed that the coordinate precision of objects changes when they are loaded into an ESRI FGDB.

Using the CoordinateRounder, all objects in my migration process are rounded to three decimal places (1 mm), and even the data in the source system have only four decimals.

I want to load them as they are into the ESRI FGDB using the FGDB writer. The source for writing is an FFS file.

After a successful load, I checked the results in ArcMap and in the Inspector. What I can see is that the objects and vertices have many more decimals than the data I loaded.

The resolution of the database is 0.0001 and the tolerance is 0.001. It is also not clear to me how I managed to write so many digits with a resolution of 0.0001.
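One possible explanation, not confirmed for this dataset, is that the extra digits come from the storage format rather than from the data. A minimal Python sketch, assuming the geodatabase keeps each coordinate as an integer count of resolution-sized cells from an origin and converts it back to a double on read. The function name, the origin, and the coordinate below are hypothetical values chosen only for illustration:

```python
from decimal import Decimal


def snap_to_grid(value: float, origin: float, resolution: float) -> float:
    """Convert a coordinate to an integer cell count, then back to a double."""
    cells = round((value - origin) / resolution)  # integer grid index
    return origin + cells * resolution            # value as it would be read back


# Hypothetical values, not taken from the actual database.
origin = -5120900.0
resolution = 0.0001
x = 461234.567  # a coordinate already rounded to three decimals

stored = snap_to_grid(x, origin, resolution)

print(repr(x))       # shortest decimal text that maps back to this double
print(repr(stored))  # value after the round trip through the grid
print(Decimal(x))    # exact binary value actually held by the double
```

The round trip keeps the value within one resolution step of the input, but the double that comes back does not have to be the one closest to the three-decimal value. In addition, a number such as 461234.567 has no exact binary representation, so a viewer that prints the full double shows more digits than were written.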

I removed all coordinate systems to avoid coordinate transformations. I also tried setting the same coordinate system on the target as on the source, but unfortunately the result is the same.

Does anyone have an idea why extra vertices are appearing? How can I simply write the data I have, no more and no less?

Thanks in advance.