Hello everyone,

For a year, I’ve been running several workbenches daily (via Windows Task Scheduler) that read from MSSQL tables and write into various hosted feature layers used in several dashboards. I noticed unusual storage consumption on our GIS server coming from the “pgdata” folder of ArcGIS Enterprise, i.e. the underlying PostgreSQL database: up to 20 GB of additional data per month, while the total size of all my hosted feature layers stays constant at only around 1 GB. After several technical sessions with Esri, there is no doubt that this is caused by my FME workflows.

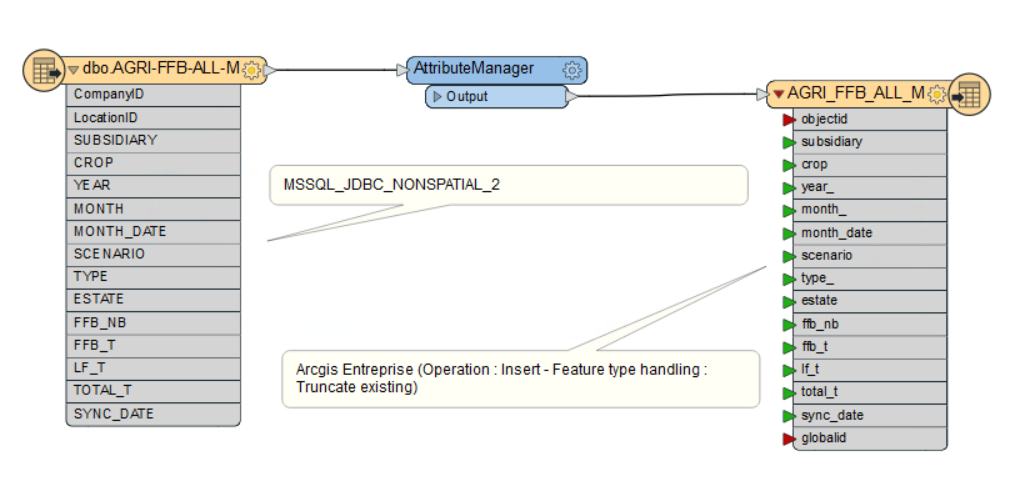

Those workbenches are far from optimised (I was just starting out with FME), but they work fine. It was easy to truncate all features and then insert the new ones, sometimes up to 150,000 features daily for a single layer (see below, simplified version). The inserted features are polygons taken from the same source hosted feature layer and joined daily with the most recent MSSQL data. NB: OBJECTID is removed with the “AttributeManager”. At the beginning of 2024, I started inserting only 2024 data and no longer data from previous years, so I expected my pgdata folder to shrink, which was not the case. Moreover, dashboard performance has degraded somewhat over time. I suspect that the truncation is not working as intended and that features accumulate over time (ghost indexes?). Have you already encountered this issue?

I understand that using the “ChangeDetector” to assign fme_operation is the correct practice and should prevent future problems, but I’m not sure it will fix the past accumulation. Is there a way to truly overwrite a hosted feature layer with FME? Am I missing something?
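To illustrate why change detection writes far less than a daily truncate-and-reload, here is a minimal conceptual sketch in plain Python (not FME's actual implementation). It assumes each polygon carries a stable key of its own (hypothetical here, since OBJECTID is removed) and compares yesterday's snapshot with today's, labelling only the rows that differ as INSERT, UPDATE or DELETE; unchanged rows produce no write at all.

```python
from typing import Dict, List, Tuple

# A record is a dict of attribute values; snapshots are keyed by a
# hypothetical stable business key (not OBJECTID, which is regenerated).
Record = Dict[str, object]


def detect_changes(
    existing: Dict[str, Record], incoming: Dict[str, Record]
) -> List[Tuple[str, str]]:
    """Return (key, operation) pairs needed to turn `existing` into `incoming`."""
    ops: List[Tuple[str, str]] = []
    for key, record in incoming.items():
        if key not in existing:
            ops.append((key, "INSERT"))  # new feature
        elif existing[key] != record:
            ops.append((key, "UPDATE"))  # attributes or geometry changed
        # identical records are skipped: nothing is written
    for key in existing:
        if key not in incoming:
            ops.append((key, "DELETE"))  # feature no longer in the source
    return ops


if __name__ == "__main__":
    yesterday = {"A": {"value": 1}, "B": {"value": 2}, "C": {"value": 3}}
    today = {"A": {"value": 1}, "B": {"value": 5}, "D": {"value": 4}}
    print(detect_changes(yesterday, today))
```

With 150,000 polygons of which only a few change each day, this approach would send a handful of operations instead of 150,000 deletes plus 150,000 inserts.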

Thank you in advance,

Arnaud