I have 50+ tables that I want to set up a migration job for, from MSSQL to PG.

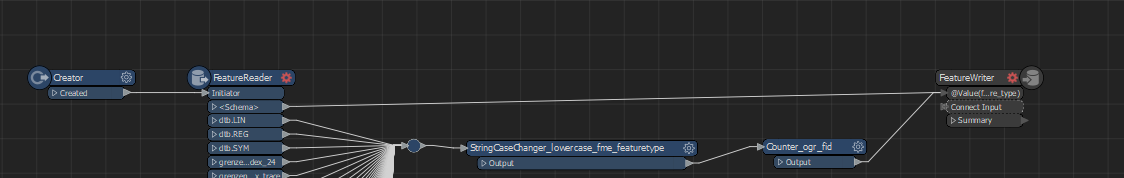

The fastest way to set this up is to use the "Generate workspace" functionality.

However, when I use this, the table qualifier is set to dbo, the default schema for MSSQL. In PG I am not using the same table qualifier name, so I want to use table qualifier X for all the tables written to PG. It does not look like that is possible, though. Do I need to set it manually for each table?

The same goes for the table name. I want to make it lowercase and remove any special characters automatically, if possible.
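
To make the renaming rule concrete, here is a rough Python sketch of what I am after. The function names and the `x` schema are placeholders of mine, not anything from the tool, and I am assuming "special characters" means anything other than letters, digits and underscores.

```python
import re


def normalize_table_name(name: str) -> str:
    """Lowercase the name and drop everything except a-z, 0-9 and underscore."""
    return re.sub(r"[^a-z0-9_]", "", name.lower())


def target_table(source_table: str, target_schema: str) -> str:
    """Swap the source qualifier (e.g. dbo) for target_schema and normalize the table name."""
    # rpartition keeps only the part after the last dot; names without a
    # qualifier pass through unchanged.
    _, _, table = source_table.rpartition(".")
    return f"{target_schema}.{normalize_table_name(table)}"


# Hypothetical table names, only to illustrate the mapping.
for source in ["dbo.Order-Details$", "dbo.Customer_Address", "Invoices"]:
    print(source, "->", target_table(source, "x"))
```

So `dbo.Order-Details$` should end up as `x.orderdetails`, ideally applied to every table in one go rather than one by one.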