Is there any way for the KMZ and Shapefile readers to pick up the download link automatically when the file name or link changes on the website?

For example:

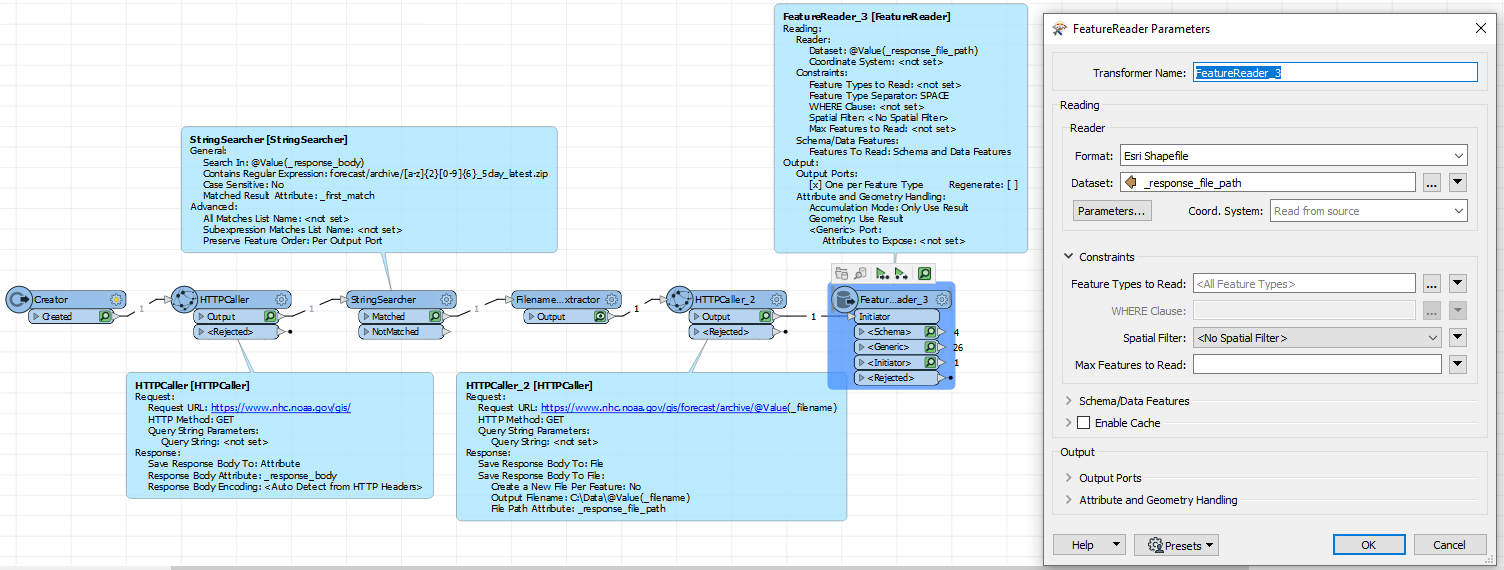

https://www.nhc.noaa.gov/gis/forecast/archive/al012022_5day_latest.zip

That is the link today, but tomorrow it may be different.

The file name can change to something like lk015856.zip, or anything else.

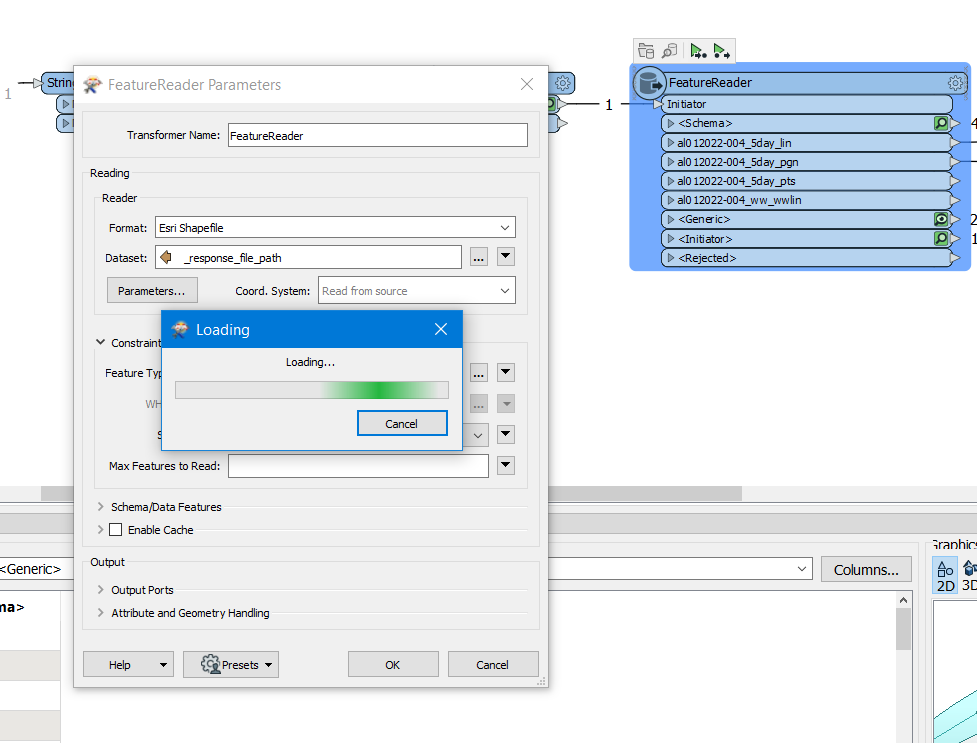

Is there any parameter I need to set if I use HTTPCaller to read the NHC website?

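To make the question concrete, here is a rough sketch in plain Python of the behaviour I am after: given the HTML of a listing page, pull out whatever `.zip` or `.kmz` links it currently contains, so the reader never needs a hard-coded file name. The names `LinkCollector` and `find_download_links`, and the base URL `https://www.nhc.noaa.gov/gis/` as the page to scan, are my own assumptions for illustration only. This is not an FME feature or setting.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def find_download_links(html, base_url, extensions=(".zip", ".kmz")):
    """Return absolute URLs from `html` whose path ends in one of `extensions`.

    `base_url` is the address the HTML was fetched from (assumed here);
    it is used to resolve relative links.
    """
    parser = LinkCollector()
    parser.feed(html)
    links = []
    for href in parser.hrefs:
        absolute = urljoin(base_url, href)
        # Compare on the path only, so query strings do not hide the extension.
        if urlparse(absolute).path.lower().endswith(extensions):
            links.append(absolute)
    return links
```

The page HTML would have to be downloaded first (in FME, presumably by HTTPCaller), and the resulting URLs would then be handed to the reader as its dataset.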