I had an engine queue pileup where jobs were just getting added to the queue and never ran. Since there were no failures, none of my automated notifications went out, so I completely missed it.

Is there a way I can set up hourly polling to check the number of jobs in the queue? I did a quick search for doing this with the REST API, but nothing jumped out at me. Thanks for any suggestions you may have.

Best answer by hkingsbury

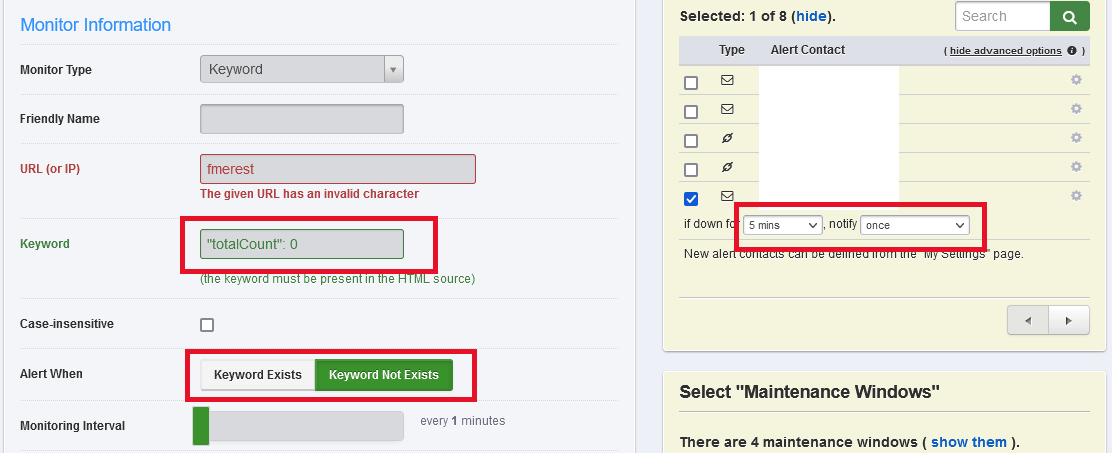

Ideally you could parse the JSON and say "if count > X for Y minutes, send email".
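As a rough sketch of that "count > X for Y minutes" check, something like the Python below might work. It only covers the parsing and threshold logic. Fetching the JSON and sending the email are left out, and the `totalCount` field name, the `queued_count` and `should_alert` helpers, and the sample values are all assumptions for illustration, so adjust them to whatever the REST response actually contains.

```python
import json
from datetime import datetime, timedelta


def queued_count(payload: str) -> int:
    """Pull the job count out of a JSON response body.

    Assumes a hypothetical shape like {"totalCount": 12}; change the key
    to match the real response.
    """
    return int(json.loads(payload)["totalCount"])


def should_alert(samples, threshold, window):
    """Return True if the count has stayed above `threshold` for at least `window`.

    `samples` is a list of (datetime, count) tuples, oldest first, one per poll.
    """
    if not samples:
        return False
    latest = samples[-1][0]
    streak_start = None
    # Walk backwards from the newest poll until the count drops to the threshold.
    for polled_at, count in reversed(samples):
        if count <= threshold:
            break
        streak_start = polled_at
    return streak_start is not None and latest - streak_start >= window


# Example: three hourly polls, all above a threshold of 10 jobs.
t0 = datetime(2024, 1, 1, 8, 0)
history = [
    (t0, queued_count('{"totalCount": 14}')),
    (t0 + timedelta(hours=1), queued_count('{"totalCount": 22}')),
    (t0 + timedelta(hours=2), queued_count('{"totalCount": 35}')),
]
if should_alert(history, threshold=10, window=timedelta(hours=2)):
    print("Queue has been backed up for 2+ hours, send the email here")
```

Keeping a short history of polls, rather than alerting on a single reading, avoids emails for brief spikes that clear on their own.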