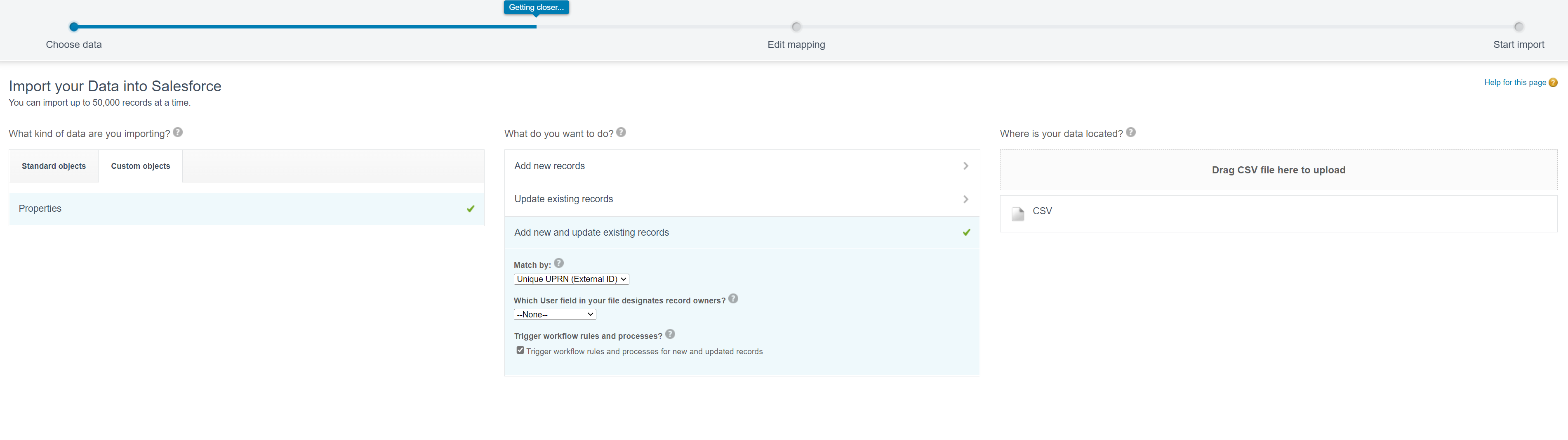

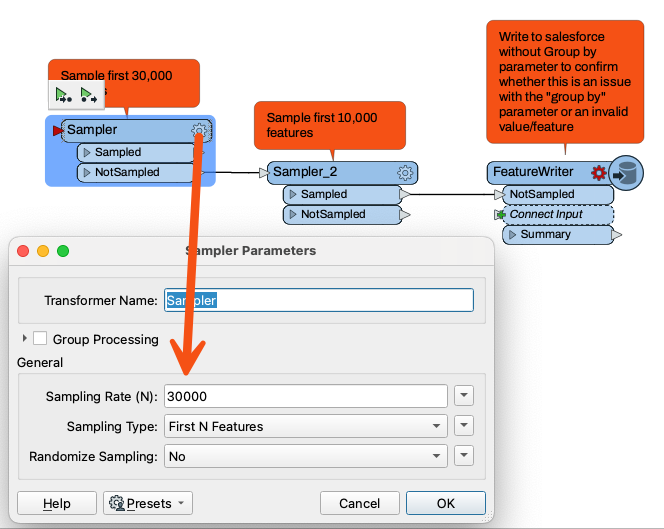

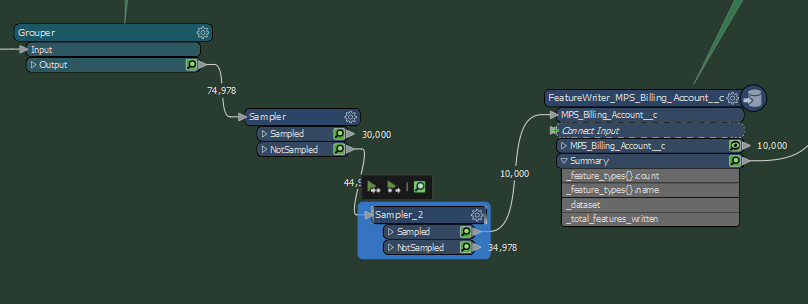

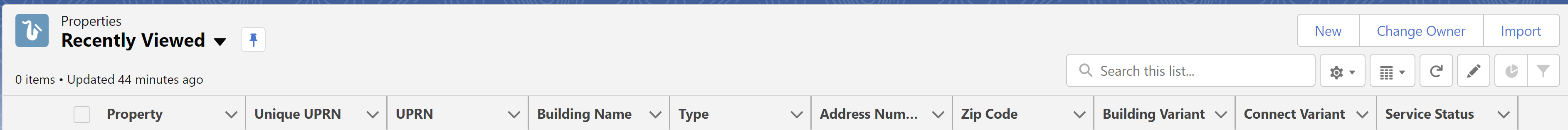

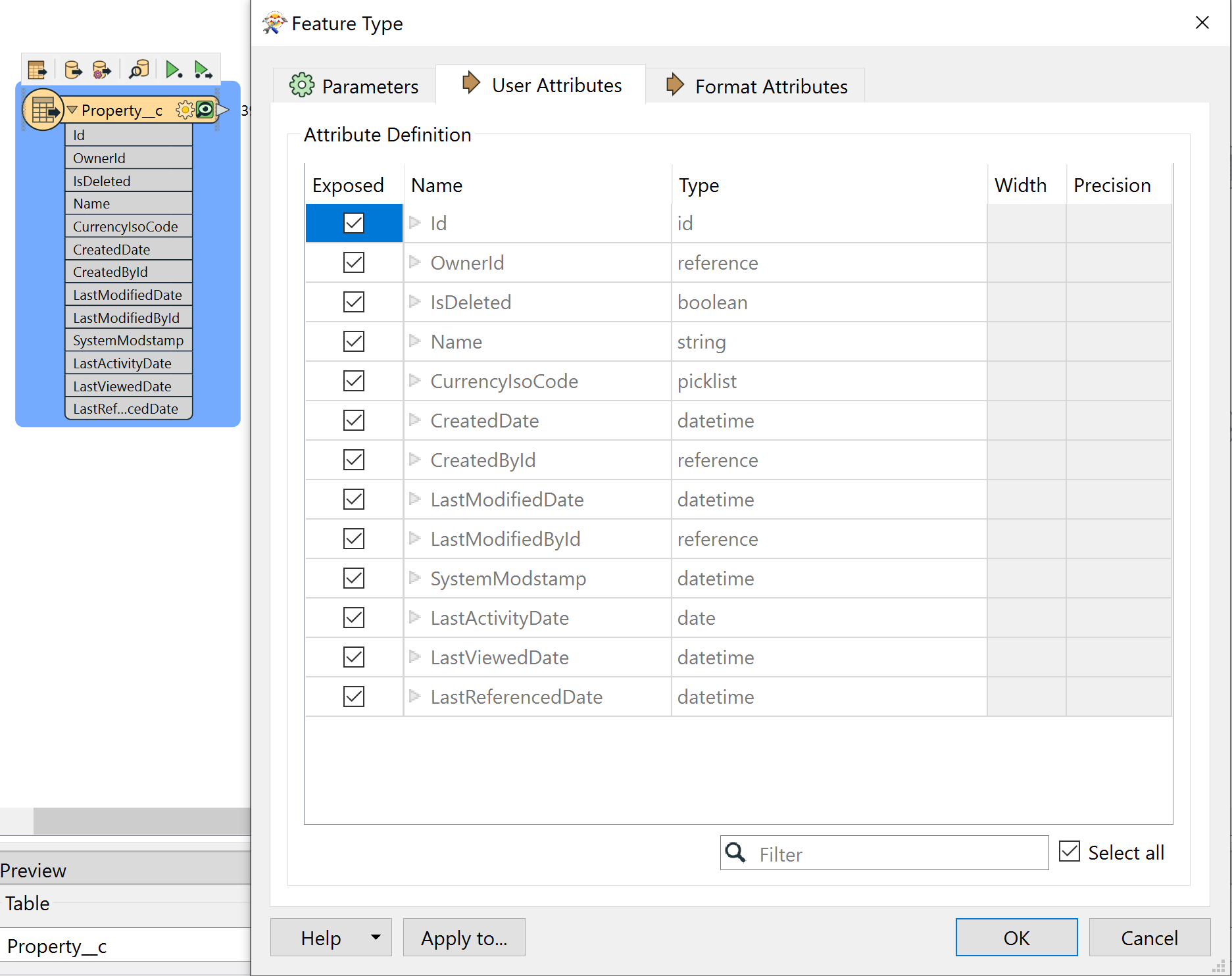

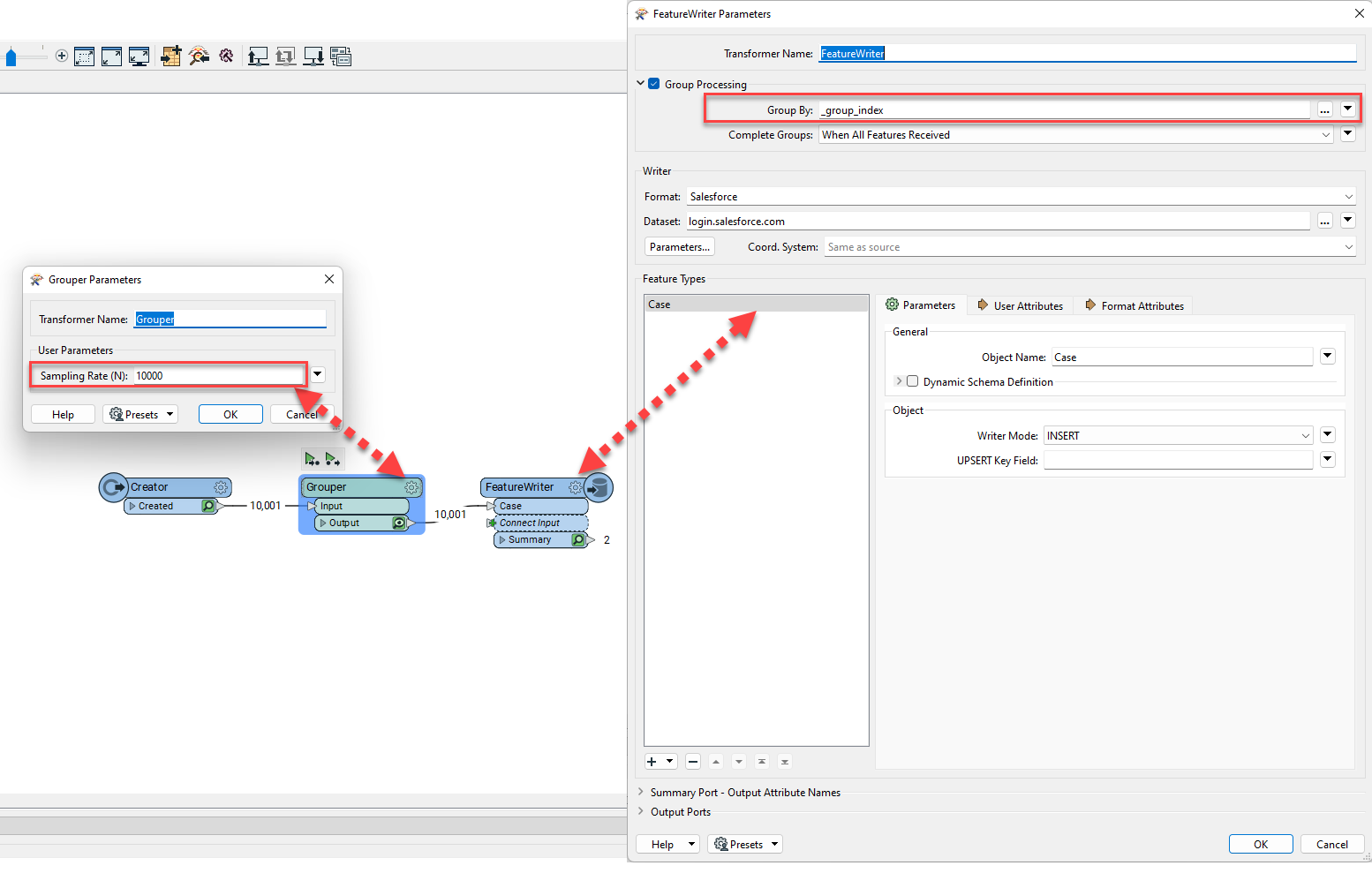

I would like to use FME to bulk upload data to Salesforce. We currently upload CSV files manually, knowing that there is a limit of 50,000 rows per file (Salesforce import wizard). I have seen that it should be possible to automate this using the Salesforce reader/writer. I have the API credentials for Salesforce and I can see all the table objects available in FME. The issue is that the object we are trying to write to appears to have different fields than expected when read (these look like metadata fields) - see image. When we import manually using the wizard, the attributes are those in the uploaded CSV (see image). Both of these tables are the custom table 'property(_c)'; the Salesforce URL indicates that this is the case. Do we need to change permissions on the object on the Salesforce side, or do anything specific with the reader/writer in FME, to get this working?

Kind regards,

Antony

As for the attributes, can you send a screenshot of the Fields & Relationships tab in the Object Manager from Salesforce Setup? From the reader feature type parameter dialog, it looks like you are missing attributes that are visible in your Salesforce web UI. FME can read and write custom objects as well as custom fields in Salesforce. However, since Salesforce is essentially a relational database, some attributes (columns) visible in the web UI may belong to another object. The Object Manager should tell you the exact field name (custom fields are suffixed with __c) that you should use in the writer feature type. Otherwise, you could use the Import from Dataset option when adding the writer to the canvas and connect to your desired object from the import wizard.
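To illustrate the difference between the labels shown in the web UI and the field names the writer expects, here is a minimal Python sketch. It assumes you have copied the label and field name pairs out of the Object Manager; the `SAMPLE_FIELDS` entries, the CSV headers and the `map_headers_to_api_names` helper are all hypothetical and not taken from your org or from FME. It only shows the idea of matching CSV column headers to field names and flagging any that may live on another object.

```python
# Hypothetical label/field-name pairs, as they might appear in the
# Object Manager for a custom object. These values are invented.
SAMPLE_FIELDS = [
    {"name": "Id", "label": "Record ID", "custom": False},
    {"name": "Name", "label": "Property Name", "custom": False},
    {"name": "CreatedDate", "label": "Created Date", "custom": False},
    {"name": "Postcode__c", "label": "Postcode", "custom": True},
    {"name": "Floor_Area__c", "label": "Floor Area", "custom": True},
]


def map_headers_to_api_names(csv_headers, fields):
    """Match CSV headers to field names by label (case-insensitive).

    Returns (mapped, unmatched): a dict of header -> field name, and a
    list of headers with no matching label on this object.
    """
    by_label = {f["label"].strip().lower(): f["name"] for f in fields}
    mapped, unmatched = {}, []
    for header in csv_headers:
        api_name = by_label.get(header.strip().lower())
        if api_name is None:
            unmatched.append(header)
        else:
            mapped[header] = api_name
    return mapped, unmatched


# Hypothetical CSV headers from a manual upload file.
mapped, unmatched = map_headers_to_api_names(
    ["Postcode", "Floor Area", "Owner Name"], SAMPLE_FIELDS
)
print(mapped)
print(unmatched)
```

Any header that ends up in `unmatched` is a candidate for a field that belongs to a related object rather than to the one you are writing to.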
