So I've had such a blessed, successful 24-hour run getting good advice on this forum, I'm going out on a limb and will keep it going...

As an FME Server shop, we leverage FME Server notifications quite a bit. Mostly the success/failure type with a custom template to inform the support team if things completed on schedule and whether everything is running normally. Show me when something is wrong and leave me alone the rest of the time. This is quite helpful.

In addition, I need more fine-grained reporting for specific workbenches, especially those powering integration with other teams. Oftentimes, that's basically an 'Emailer' transformer that sends out a custom message to a custom audience. I posted about a related subject a while back.

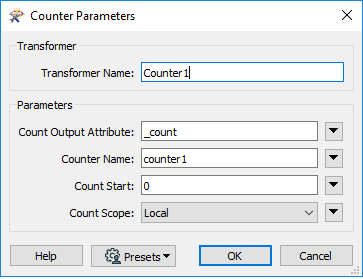

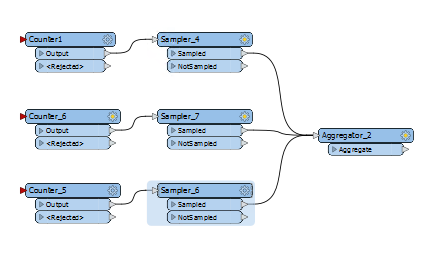

Now, I've recently put together a workspace that tries to gather various record counts across separate data streams. The easiest way I've found to do this is to create any number of counters, starting at 0 and of type 'Local',

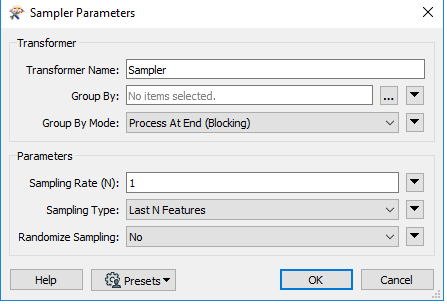

then have them feeding into a Sampler that grabs the last record it is fed,

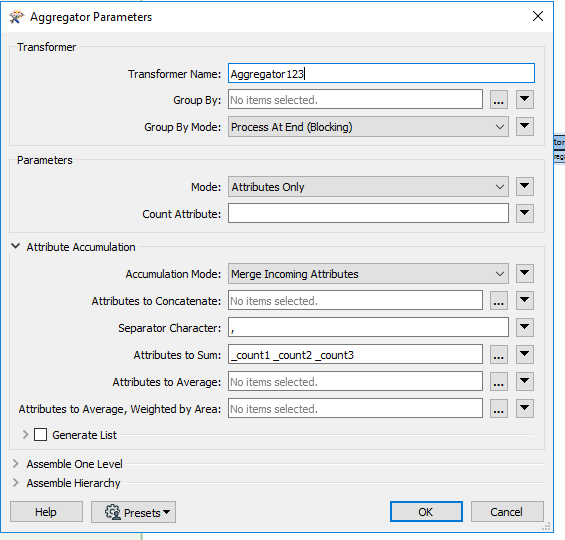

and have all these streams feed into an aggregator that sums across each counter attribute.
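
To make the logic of that chain concrete, here is a minimal sketch in plain Python. It does not use the FME API; it only models what the counter, sampler and aggregator steps do to the data. The function names, the `<stream>_count` attribute naming and the sample streams are all hypothetical, made up for illustration. Note that in this model a counter starting at 0 leaves the last record with the value n-1; whether that matches the Counter transformer's exact numbering is an assumption worth checking against your own output.

```python
from typing import Dict, List

Feature = Dict[str, object]


def add_counter(features: List[Feature], attr: str, start: int = 0) -> List[Feature]:
    """Model of a local counter: tag each feature with a running value."""
    return [{**f, attr: start + i} for i, f in enumerate(features)]


def sample_last(features: List[Feature]) -> List[Feature]:
    """Model of a sampler that keeps only the last feature (none if empty)."""
    return features[-1:]


def aggregate_sum(features: List[Feature], attrs: List[str]) -> Dict[str, int]:
    """Model of an aggregator: sum each counter attribute, missing values as 0."""
    return {a: sum(int(f.get(a, 0)) for f in features) for a in attrs}


def summarize(streams: Dict[str, List[Feature]], start: int = 0) -> Dict[str, int]:
    """Run counter -> sampler for every stream, then aggregate the survivors."""
    survivors: List[Feature] = []
    for name, feats in streams.items():
        attr = f"{name}_count"  # hypothetical naming: one attribute per stream
        survivors.extend(sample_last(add_counter(feats, attr, start)))
    return aggregate_sum(survivors, [f"{name}_count" for name in streams])


if __name__ == "__main__":
    # Hypothetical sample streams, just to exercise the functions.
    demo = {
        "roads": [{"id": 1}, {"id": 2}, {"id": 3}],
        "parcels": [{"id": 10}],
    }
    print(summarize(demo, start=1))
```

Only one feature per stream reaches the aggregator, and it carries just that stream's counter attribute, so summing per attribute yields one record holding every count.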

This works alright. But it really clutters up the workspace if you have several streams where you are counting ...

I am always leery of workspaces with spaghetti-style connections. They are confusing and easy to mess up when you are dragging and dropping.

So, long question short: is there a better way to gather this kind of information and feed an Emailer with it? Is there a way to get to my counter values without having them ride on a feature to the finish line? Could they be a workspace parameter, maybe? Any insights or experiences would be helpful. Thanks.