I am setting up a data transformation task using the following high-level workflow:

- Run a data audit that compiles a database table of file paths to MapInfo TAB files.

- Compile data about each discovered file, including a field that indicates whether that dataset should be transformed (field “TransformRequired”, with Yes or No values).

- Once the data audit is complete, use the database table as the input list for the transformation, reading each file with the FeatureReader transformer.

- Write the output with a MapInfo TAB writer using a dynamic schema, with the “Schema from Schema feature” option selected as the schema source.
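The audit step can be sketched in plain Python (this is not FME code). It walks a folder tree, matches the `.tab` extension case-insensitively (one of the files below, `PARCEL_VIEW_GDA94.TAB`, uses an uppercase extension), and stores each path with a `TransformRequired` flag in an SQLite table. The table name `audit`, the function `audit_tab_files`, and the rule that decides the flag are hypothetical placeholders, not part of the original workflow.

```python
import sqlite3
import tempfile
from pathlib import Path
from typing import Callable


def audit_tab_files(
    root: Path,
    conn: sqlite3.Connection,
    needs_transform: Callable[[Path], bool],
) -> int:
    """Record every MapInfo .tab file under root in a hypothetical 'audit' table.

    Returns the number of files recorded.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS audit ("
        " path TEXT PRIMARY KEY,"
        " TransformRequired TEXT CHECK (TransformRequired IN ('Yes', 'No')))"
    )
    count = 0
    # Compare suffixes in lower case so both '.tab' and '.TAB' are picked up.
    for path in sorted(root.rglob("*")):
        if path.is_file() and path.suffix.lower() == ".tab":
            flag = "Yes" if needs_transform(path) else "No"
            conn.execute(
                "INSERT OR REPLACE INTO audit (path, TransformRequired) VALUES (?, ?)",
                (str(path), flag),
            )
            count += 1
    conn.commit()
    return count


# Demo on a throwaway folder tree that mirrors the paths listed in the post.
conn = sqlite3.connect(":memory:")
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for rel in [
        "GDA2020/MapInfoV16/PARCEL_VIEW.tab",
        "GDA2020/MapInfoV17/PARCEL_VIEW.tab",
        "GDA94/PARCEL_VIEW_GDA94.TAB",
        "GDA94/PLAN_ZONE.tab",
    ]:
        file_path = root / rel
        file_path.parent.mkdir(parents=True, exist_ok=True)
        file_path.touch()
    # Hypothetical rule: only files inside a 'GDA94' folder need transforming.
    n = audit_tab_files(root, conn, lambda p: "GDA94" in p.parts)

rows = conn.execute("SELECT path, TransformRequired FROM audit").fetchall()
for path, flag in rows:
    print(flag, path)
```

Because the full path is the primary key, the two `PARCEL_VIEW.tab` files stay as separate rows at this stage.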

The problem is that the input list includes MapInfo tables that share the same file name but sit in different folders. The paths I am trying to read include:

\\Input\\GDA2020\\MapInfoV16\\PARCEL_VIEW.tab

\\Input\\GDA2020\\MapInfoV17\\PARCEL_VIEW.tab

\\Input\\GDA94\\PARCEL_VIEW_GDA94.TAB

\\Input\\GDA94\\PLAN_ZONE.tab

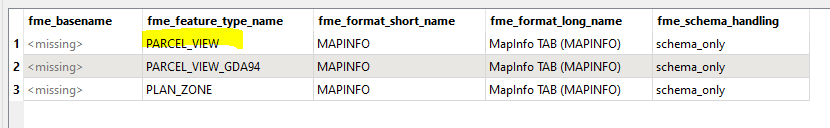

When I read the list into the FeatureReader and use its Schema and Generic output ports, the schema records are output once per unique fme_feature_type, so I get 3 schema records instead of the expected 4.
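To make the count concrete, here is a plain-Python illustration (not FME code) of why four inputs collapse to three schema records. It assumes, as the behaviour above suggests, that each MapInfo table's feature type is its file name without the extension, and that schema records are kept once per feature type. The path strings are copied from the list above; in a normal Python string literal each `\\` is a single backslash.

```python
from collections import Counter
from pathlib import PureWindowsPath

paths = [
    "\\Input\\GDA2020\\MapInfoV16\\PARCEL_VIEW.tab",
    "\\Input\\GDA2020\\MapInfoV17\\PARCEL_VIEW.tab",
    "\\Input\\GDA94\\PARCEL_VIEW_GDA94.TAB",
    "\\Input\\GDA94\\PLAN_ZONE.tab",
]

# Assumption: feature type == file name without its extension.
feature_types = [PureWindowsPath(p).stem for p in paths]

# One schema record per feature type merges the two PARCEL_VIEW tables.
schema_keys = Counter(feature_types)
print(len(paths), len(schema_keys))  # prints: 4 3
print(schema_keys["PARCEL_VIEW"])    # prints: 2
```

The folder is discarded as soon as only the stem is used as the key, which is why both `PARCEL_VIEW` tables end up under one record.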

The output is affected too: only 3 tables are written, and the duplicated table name, PARCEL_VIEW, contains the records from both MapInfo tables of that name.

The attached image shows the schema records from the FeatureReader. Note that there are actually two MapInfo tables named “PARCEL_VIEW”, each in a different folder.

I was wondering whether there is a way to have the FeatureReader return 4 schema records (one per MapInfo table) while still using the “Schema from Schema feature” option in the dynamic writer.
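The desired outcome of one key per table can be pictured in plain Python (again, not FME code): if each table's name also carried something that differs between folders, such as the parent folder name, the four tables would produce four distinct keys. The helper `folder_qualified_name` and its `<folder>_<file>` pattern are hypothetical; this sketch does not show how, or whether, such a renamed feature type works with the “Schema from Schema feature” option.

```python
from pathlib import PureWindowsPath

paths = [
    "\\Input\\GDA2020\\MapInfoV16\\PARCEL_VIEW.tab",
    "\\Input\\GDA2020\\MapInfoV17\\PARCEL_VIEW.tab",
    "\\Input\\GDA94\\PARCEL_VIEW_GDA94.TAB",
    "\\Input\\GDA94\\PLAN_ZONE.tab",
]


def folder_qualified_name(path: str) -> str:
    """Hypothetical naming rule: '<parent folder>_<file name without extension>'."""
    p = PureWindowsPath(path)
    return f"{p.parent.name}_{p.stem}"


names = [folder_qualified_name(p) for p in paths]
for name in names:
    print(name)
# prints:
# MapInfoV16_PARCEL_VIEW
# MapInfoV17_PARCEL_VIEW
# GDA94_PARCEL_VIEW_GDA94
# GDA94_PLAN_ZONE
```

The parent folder name happens to be enough for these four paths, but it is not guaranteed to be unique in general (two different roots could both contain a `MapInfoV16` folder); a key built from the full relative path would be safer.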