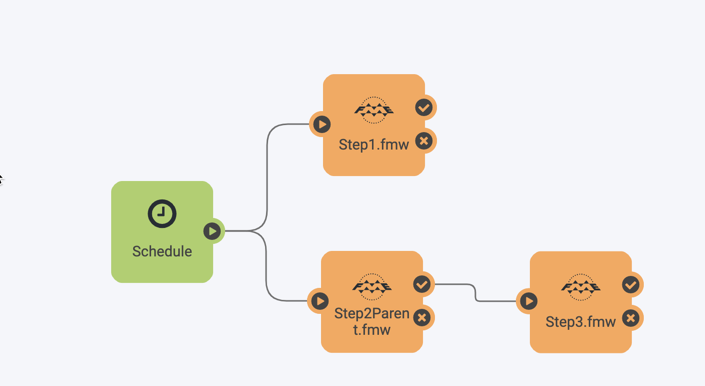

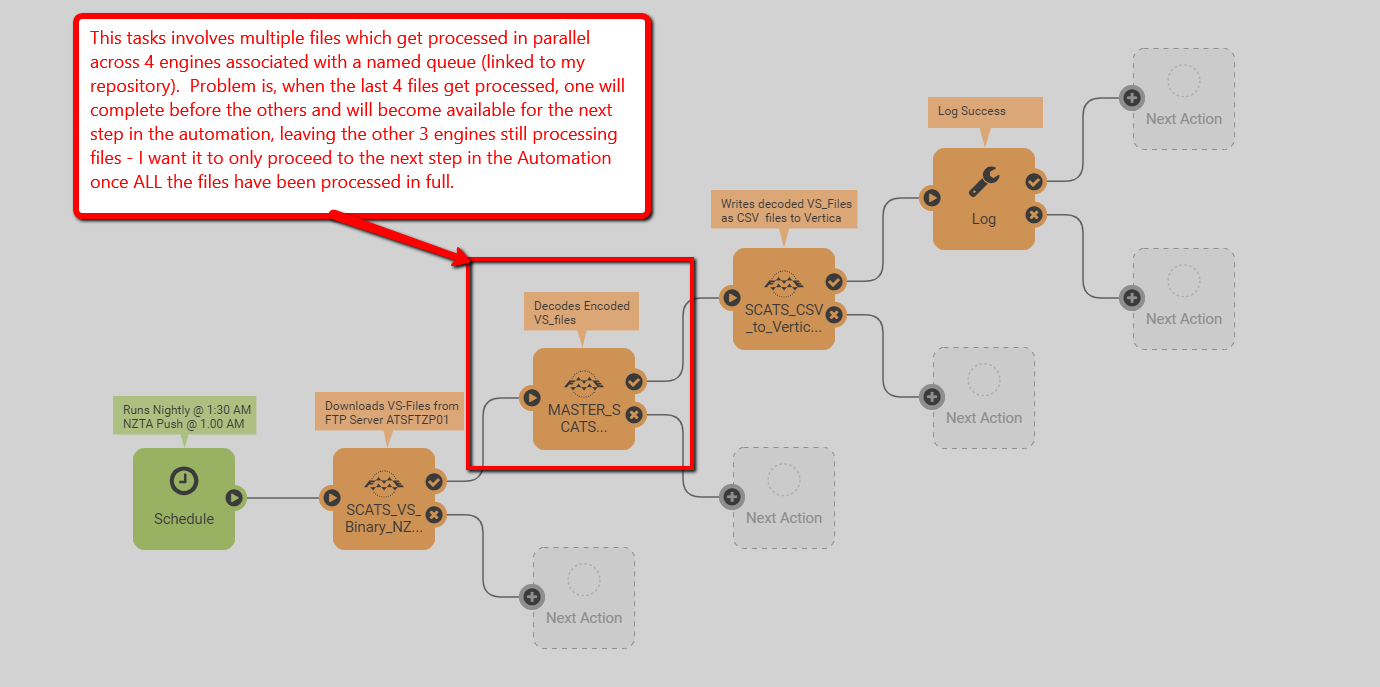

I have an FME Server Automation, triggered on a schedule, that has these basic steps:

1. Reads binary files from an SFTPS site and writes them to staging folder (A)

2. Reads the binary files from staging folder (A), decodes them, and writes the results to staging folder (B)

3. Reads the decoded files from staging folder (B) and writes them to a Vertica database

4. Cleans up staging folders (A) and (B), ready for the next scheduled run

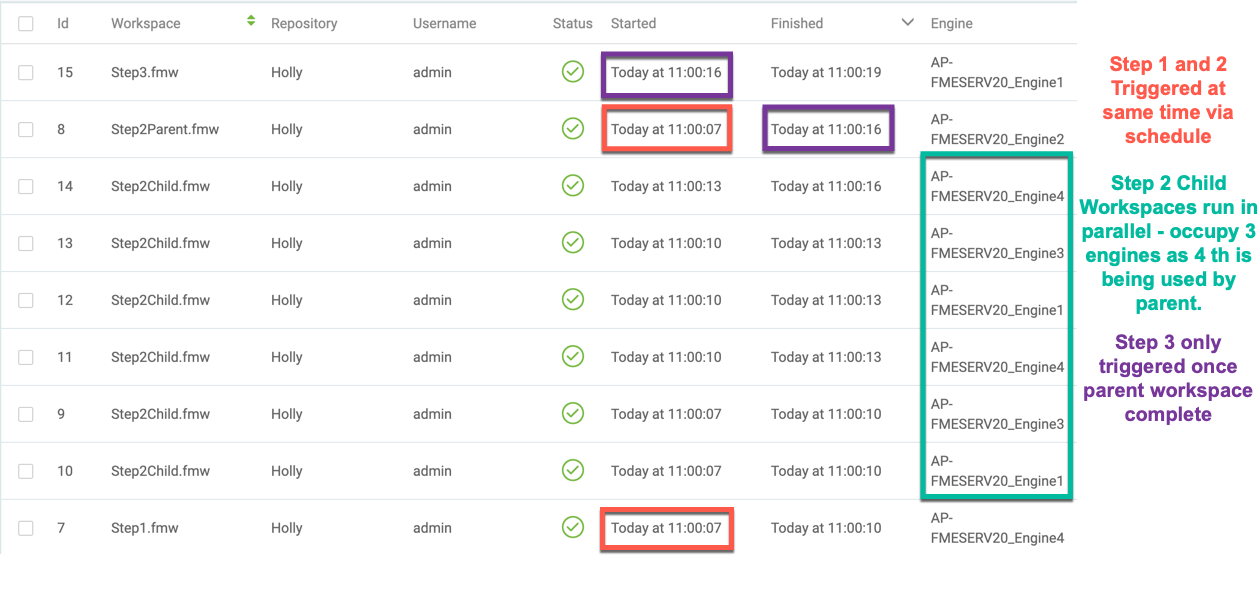

The decoding bit in step (2) takes its file input from a PATH Reader and can take some time to finish, so I run the workspace in parallel against an FME Server queue across 4 engines.

My issue is that the Automation moves to step (3) as soon as 1 of the 4 engines becomes available. Step (3) and step (4) are fast and complete before the remaining 3 engines running step (2) have finished. This results in 3 files arriving in staging folder (B) after the Automation has finished.

QUESTION: Is there a "simple" way to make the Automation wait until step (2) has fully completed on all 4 engines, before moving to step (3)?
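To make the behaviour I'm after concrete, here is a minimal Python sketch of the "wait for all" pattern. It is not FME code: `decode_file`, `run_step_2`, and the file names are hypothetical stand-ins for the step (2) workspace and its inputs, and the thread pool just plays the role of the 4 engines.

```python
from concurrent.futures import ThreadPoolExecutor, wait, ALL_COMPLETED
import time


def decode_file(name: str) -> str:
    """Hypothetical stand-in for the step (2) decode workspace."""
    # Simulate jobs that finish at different times.
    time.sleep(0.01 * len(name))
    return name + ".decoded"


def run_step_2(files, engines=4):
    """Run the decodes in parallel; return only when every job has finished."""
    with ThreadPoolExecutor(max_workers=engines) as pool:
        futures = [pool.submit(decode_file, f) for f in files]
        # The barrier: block until ALL jobs are done, not just the first one.
        done, not_done = wait(futures, return_when=ALL_COMPLETED)
    if not_done:
        raise RuntimeError("some decode jobs did not finish")
    # Results come back in submission order, regardless of finish order.
    return [f.result() for f in futures]


if __name__ == "__main__":
    decoded = run_step_2(["a.bin", "bb.bin", "ccc.bin", "dddd.bin"])
    # Only now would step (3) (load to database) and step (4) (cleanup) run.
    print(decoded)
```

In Automation terms, I am looking for the equivalent of that `wait(..., return_when=ALL_COMPLETED)` call sitting between step (2) and step (3).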

The Automation Merger looked promising, but I would have to split the output from the PATH Reader in Step (2) across 4 workspaces and use something like a Sampler to split the file feeds.

I looked at using a Decelerator and/or an FMEServerJobWaiter, but neither seemed very elegant – any ideas?

Regards

Mike