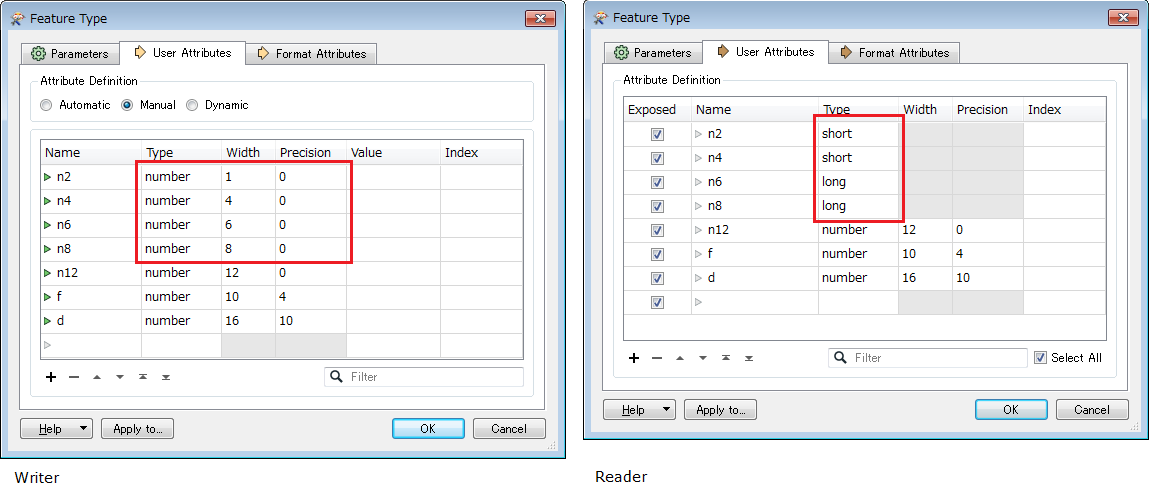

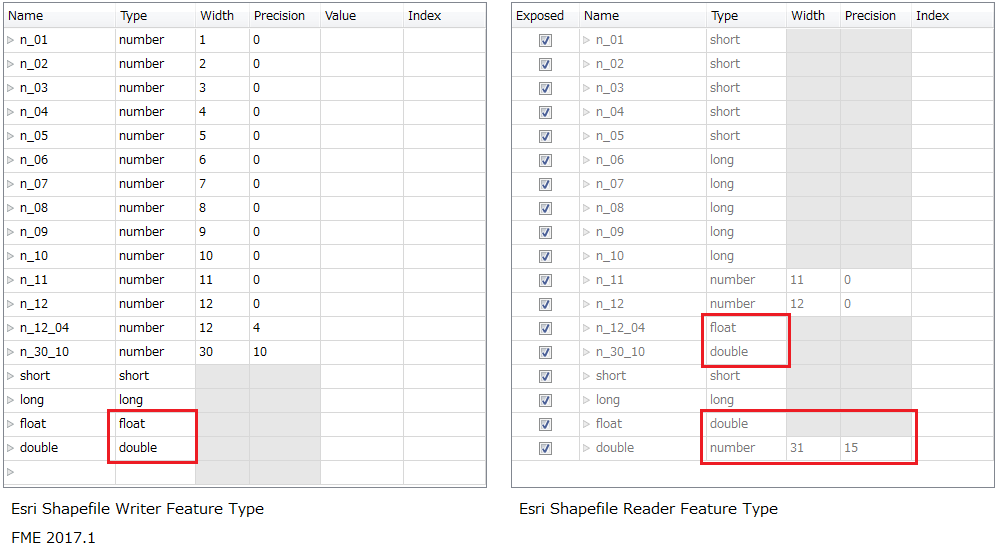

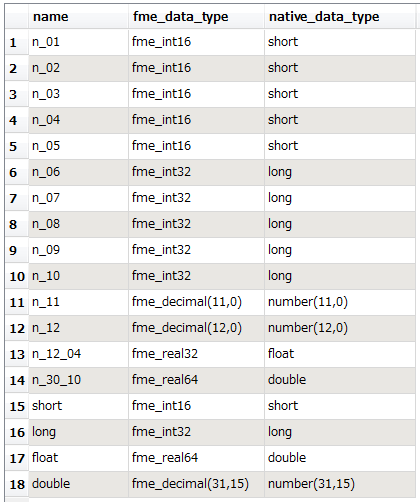

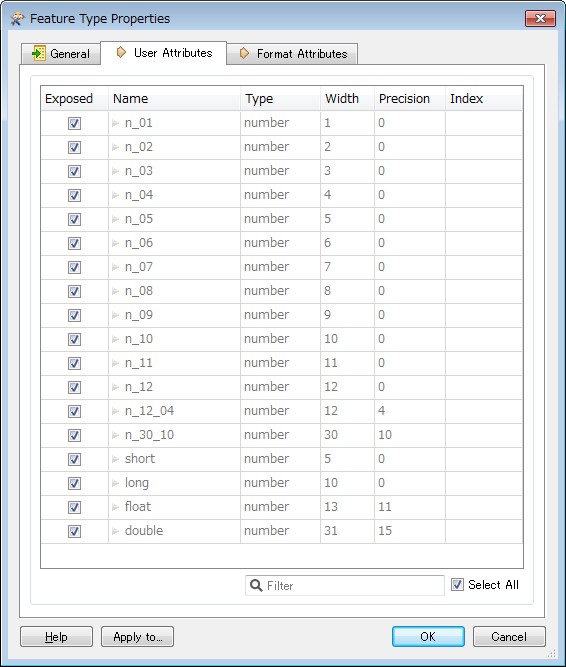

My shapefiles have attributes stored as short integers (6 digits wide), and FME 2016 reads them as long, so when I write them out in a dynamic workflow, their width becomes 10.
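To check what the .dbf actually declares for these fields, independent of how FME interprets them, you can read the field descriptors straight from the file header. This is a minimal sketch that assumes the dBASE III descriptor layout used by shapefile .dbf files: 32-byte descriptors after a 32-byte file header, type code at byte 11, width at byte 16, decimal count at byte 17. Both function names are hypothetical helpers, not part of FME:

```python
import struct
from typing import List, Tuple

# One entry per field: (name, type code, width, decimal count)
Field = Tuple[str, str, int, int]


def read_dbf_fields(data: bytes) -> List[Field]:
    """Parse the field descriptors from the raw bytes of a dBASE III .dbf header."""
    # Bytes 8-9 of the 32-byte file header hold the total header length (little-endian).
    header_len = struct.unpack_from("<H", data, 8)[0]
    fields: List[Field] = []
    offset = 32  # field descriptors start right after the file header
    # Each descriptor is 32 bytes; the descriptor list ends with a 0x0D byte.
    while offset < header_len and data[offset] != 0x0D:
        desc = data[offset:offset + 32]
        name = desc[0:11].split(b"\x00", 1)[0].decode("ascii")
        ftype = chr(desc[11])   # e.g. 'N' numeric, 'C' character
        width = desc[16]        # total field width in characters
        decimals = desc[17]     # digits after the decimal point
        fields.append((name, ftype, width, decimals))
        offset += 32
    return fields


def read_dbf_fields_from_file(path: str) -> List[Field]:
    """Read only the header of a .dbf file and return its field descriptors."""
    with open(path, "rb") as f:
        head = f.read(32)
        header_len = struct.unpack_from("<H", head, 8)[0]
        return read_dbf_fields(head + f.read(header_len - 32))
```

Pointing `read_dbf_fields_from_file` at one of the shapefiles' .dbf files lists each field. If the fields really are 6-digit integers, they should show up as type `N` with width 6 and 0 decimals.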

- If I manually set the type to short, the width becomes 5 digits, which is too narrow.

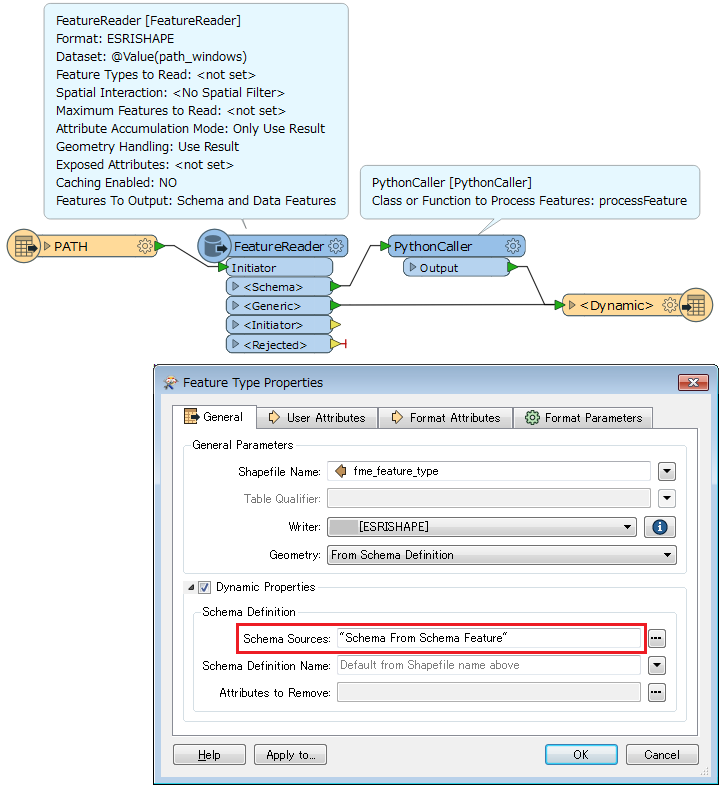

- If I manually set the type to number with width 6 and precision 0, the output is correct, but I can't do that dynamically for datasets containing many shapefiles.
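What the second bullet does by hand, applied to every integer attribute at once, is a simple type rewrite. This is a minimal sketch that assumes the schema is available as a name-to-type mapping using this post's notation (`short`, `long`, `number(width,decimals)`). `force_integer_width` is a hypothetical helper, not an FME function, and hooking it into a dynamic writer's schema is not shown:

```python
from typing import Dict

# Hypothetical type names, following the notation used in this post (not an FME API).
INTEGER_TYPES = {"short", "long"}


def force_integer_width(schema: Dict[str, str], width: int = 6) -> Dict[str, str]:
    """Return a copy of schema with short/long attributes rewritten to number(width,0)."""
    out: Dict[str, str] = {}
    for name, ftype in schema.items():
        if ftype.lower() in INTEGER_TYPES:
            # Same effect as manually choosing number, width 6, precision 0
            out[name] = f"number({width},0)"
        else:
            # Character, float, and already-explicit number types are left untouched
            out[name] = ftype
    return out
```

A fixed width of 6 is only safe if no value needs more than 6 characters (a minus sign counts). Taking the width per field from the source .dbf instead of a constant would avoid that risk.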

This started when FME 2016 added support for the short/long/etc. data types for shapefiles. With FME 2015, dynamic workflows read my data correctly as numbers with 6 digits.

Is this a bug, or can I automatically force all such attributes to numbers with 6 digits?