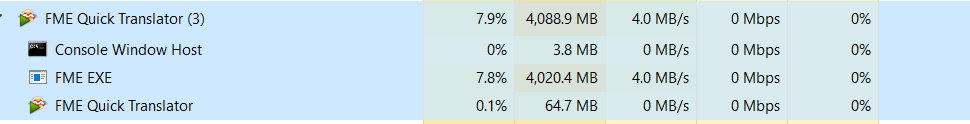

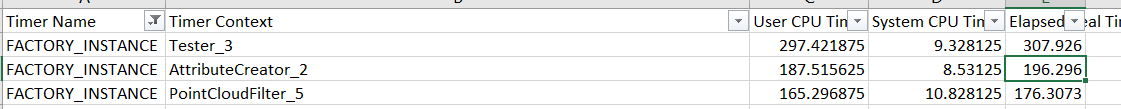

I have a point cloud dataset of 1.8 billion points and I am filtering on a single component value. The filtering takes 15 minutes without maxing out CPU, memory, or IOPS, so it could be faster. However, the PointCloudFilter doesn't have the ability to run in parallel. Does anyone have an idea of how to make it run quicker?

I also tested making the component spatial and then using a clip on the point cloud, but it seemed to be slightly slower in a couple of tests I ran.